Overview of Scientific Reproducibility

Overview

Teaching: 30 min

Exercises: 15 min

Questions

How is ‘reproducibility’ defined?

Why is scientific reproducibility important?

Objectives

Differentiate among various definitions of ‘reproducibility’ and other related concepts.

Recall historical and contemporary imperatives for scientific reproducibility.

Anyone working in an organization that supports or engages in scientific research has likely encountered discussions about reproducibility. In the past decade, research stakeholders have become increasingly insistent in their calls for data sharing and research transparency as a means of upholding the credibility of scientific outputs. This episode engages with these discussions and illustrates the various reasons behind demands for reproducibility, beginning with an exploration of the term “reproducibility.”

Defining “Reproducibility”

Reproducibility, which has emerged as a standard-bearer for scientific integrity, considers what happens when a research study is repeated. For example, a researcher discovers an article that reports study findings, and then decides to conduct the same study to test whether or not the results will be consistent in a subsequent iteration of the study. If the results from the repeated study corroborate those of the original study, then the results in the published article can be considered reproducible.

However, the term “reproducibility” carries important assumptions about the nature of the repeated study. In some contexts, the assumption is that the repeated study applied the same methods and analyzed the same data used by the original study investigator. In other contexts, reproducibility implies that either the original methods or the original data, but not both, were used to assess the integrity of results.

To disambiguate these various meanings, we specify three types of reproducibility that articulate the primary distinctions among the various conceptions of reproducibility defined by Victoria Stodden (2015): empirical reproducibility, statistical reproducibility, and computational reproducibility.

Empirical Reproducibility

original methods + new data

Empirical reproducibility assumes that research methods and protocols are documented comprehensively enough for subsequent studies to follow them. Those studies collect and analyze new data to yield results that corroborate those reported by the original investigators.

In animal studies, repeating experiments to demonstrate empirical reproducibility is expected. This requires standardizing experimental conditions so that inconsistencies in study protocols are not the cause of inconsistencies in results. For experiments with mice, this includes lab-specified cage types and temperatures, cage cleaning schedules, feeding protocols, and so on. Despite careful adherence to standards, one often overlooked detail is known to affect the empirical reproducibility of experimental results: the experimenter. For reasons not yet fully understood, differences among experimenters, such as gender and attitude, may introduce enough variance in experimental conditions to cause empirical irreproducibility.

Statistical Reproducibility

new methods + original data

Statistical reproducibility relies on the application of appropriate statistical methods to yield defensible results. Studies that suffer from statistical irreproducibility have weaknesses in the statistical methods and analysis, which precludes the possibility of repeating the study and yielding the same results.

The perpetually changing conditions of the Earth’s climate system cannot be studied in repeated experiments. Climate scientists therefore test the reliability of their global temperature projections using a coordinated model intercomparison strategy. With this strategy, researchers run different computational models using the same data inputs and then compare the results produced by each model. Agreement among models demonstrates statistical reproducibility, which suggests that the results are reliable. Disagreement, on the other hand, may indicate misapplication of model algorithms or problematic model design, both signs of statistical irreproducibility.
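The intercomparison idea (new methods + original data) can be illustrated with a toy example. The sketch applies two different trend estimators, ordinary least squares and Theil-Sen, to one shared input series and checks whether their slopes agree within a tolerance. Several things here are assumptions made for illustration:

- the synthetic data;
- the choice of estimators;
- the 0.005 tolerance.

Real model intercomparisons compare full climate models, not simple trend lines.

```python
from itertools import combinations
from statistics import median


def ols_slope(xs, ys):
    """Ordinary least-squares slope of ys regressed on xs."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    return covariance / variance


def theil_sen_slope(xs, ys):
    """Theil-Sen slope: the median of the slopes between every pair of points."""
    slopes = [
        (y2 - y1) / (x2 - x1)
        for (x1, y1), (x2, y2) in combinations(zip(xs, ys), 2)
        if x2 != x1
    ]
    return median(slopes)


def models_agree(estimates, tolerance):
    """True when the spread of the estimates is within the tolerance."""
    return max(estimates) - min(estimates) <= tolerance


# Synthetic shared input (hypothetical): a 0.02-per-step trend plus alternating +/-0.01 noise.
xs = list(range(10))
ys = [0.02 * x + (0.01 if x % 2 == 0 else -0.01) for x in xs]

# Two different "methods" applied to the same "original data".
estimates = [ols_slope(xs, ys), theil_sen_slope(xs, ys)]
print(estimates)
print(models_agree(estimates, tolerance=0.005))  # True
```

If the two estimates diverged beyond the tolerance, that disagreement would be the cue to inspect how each method was applied.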

Computational Reproducibility

original methods + original data

Computational reproducibility considers scientific practice that relies on computational methods to produce results. Simply put, it is the ability to use the original data and code to produce results identical to those of the original study.
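"Identical results" can be checked mechanically. The sketch below re-runs an analysis on the original data and compares a SHA-256 fingerprint of the new result with one recorded when the results were first produced. Two parts are hypothetical and for illustration only:

- the `analysis` function, which stands in for an original script;
- the rounding to six decimal places, chosen to define "identical" at a fixed precision.

```python
import hashlib
import json


def analysis(data):
    """Hypothetical stand-in for an original analysis script."""
    n = len(data)
    mean = sum(data) / n
    variance = sum((x - mean) ** 2 for x in data) / (n - 1)
    return {"n": n, "mean": round(mean, 6), "variance": round(variance, 6)}


def fingerprint(result):
    """SHA-256 digest of a result, serialized deterministically (sorted keys)."""
    payload = json.dumps(result, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def is_reproduced(original_digest, data):
    """Re-run the analysis on the data and compare fingerprints."""
    return fingerprint(analysis(data)) == original_digest


data = [2.1, 2.4, 1.9, 2.8, 2.2]
published = fingerprint(analysis(data))  # digest recorded alongside the original results

print(is_reproduced(published, data))       # True: same data, same code
print(is_reproduced(published, data[:-1]))  # False: the input data differ
```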

The discovery of a single flaw in programming scripts used to analyze chemistry data called into question over 100 published studies. The issue was a module in the scripts that produced correct calculation results only when run on a specific operating system. Researchers realized this only when they noticed inconsistent results from running the scripts in different computing environments. The inconsistencies, which demonstrate computational irreproducibility, were significant enough to prompt researchers to reconsider the results of their published studies.
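The scenario does not detail how the module depended on the operating system. One common way for a script to behave differently across systems is to rely on the order in which a directory listing is returned. Python's `glob.glob`, for example, does not guarantee sorted results; ordering depends on the file system.

The sketch below is illustrative and is not the original chemistry code. The `.out` file names are hypothetical. It contrasts an unordered file listing with one sorted explicitly, so every platform processes files in the same order.

```python
import glob
import os
import tempfile


def list_inputs_unsorted(directory):
    """Order depends on the file system and OS, so it is not guaranteed to be stable."""
    return glob.glob(os.path.join(directory, "*.out"))


def list_inputs_sorted(directory):
    """Deterministic order on every platform: sort the matched paths by name."""
    return sorted(glob.glob(os.path.join(directory, "*.out")))


# Demonstration with hypothetical output files in a temporary directory.
with tempfile.TemporaryDirectory() as tmp:
    for name in ["conf_10.out", "conf_2.out", "conf_1.out"]:
        with open(os.path.join(tmp, name), "w") as handle:
            handle.write("placeholder\n")
    ordered = [os.path.basename(p) for p in list_inputs_sorted(tmp)]

print(ordered)  # ['conf_1.out', 'conf_10.out', 'conf_2.out']
```

Plain `sorted` gives a stable lexicographic order, not numeric order. If numeric order matters, pass an explicit `key` function.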

Suggested reading:

Stodden, V. (2015). Reproducing statistical results. Annual Review of Statistics and Its Application, 2(1), 1–19. https://doi.org/10.1146/annurev-statistics-010814-020127

Spotlight: Reproducibility vs. Replicability

In discussions of reproducibility, you may also have seen the term replicability used to describe any one of the three definitions provided above. The terms reproducibility and replicability have been used interchangeably and with conflicting definitions, obscuring their intended meanings. However, the scientific community does seem to be converging on clearer definitions that capture the nuances of these related terms.

A recent report published by the National Academies of Sciences, Engineering, and Medicine (2019) makes a clear distinction between reproducibility and replicability to resolve ambiguities in the use of these and related terms. Their definitions, as presented in the report, are below:

Reproducibility is obtaining consistent results using the same input data; computational steps, methods, and code; and conditions of analysis. This definition is synonymous with “computational reproducibility[.]”

Replicability is obtaining consistent results across studies aimed at answering the same scientific question, each of which has obtained its own data (p. 46).

Suggested reading:

National Academies of Sciences, Engineering, and Medicine. (2019). Reproducibility and replicability in science. National Academies Press. https://doi.org/10.17226/25303

The following challenge offers an opportunity to apply the definitions of the three types of reproducibility to real-world scenarios. Exploring these scenarios will help to solidify our understanding of the nuances among the reproducibility types and their definitions, and to see how irreproducibility can play out.

Exercise: Reproducibility, Reproducibility, Reproducibility

Read the descriptions of real-life instances in which published research findings were discovered to be non-reproducible. Based on the definitions of reproducibility types, determine which—empirical, statistical, or computational—applies to the scenario in each description.

Scenario 1: Slicing and Dicing Food Data

If you believe that shopping hungry compels you to make poor food purchasing decisions or that the size of your plate can influence how much you eat, you have a single university-based food lab to thank. Over the past three decades, the principal investigator (PI) of this renowned lab published over 250 articles describing results of studies of food-related behavior. Many of these findings entered the popular imagination through mainstream media outlets. Some of these studies informed the USDA’s Center for Nutrition Policy and Promotion, which issues dietary guidance for the National School Lunch Program, the Supplemental Nutrition Assistance Program (SNAP), and other federally sponsored nutrition programs.

A closer look at the lab’s research by other scientists and statisticians, however, revealed considerable problems that would prevent them from repeating the analyses of published works. What they discovered was rampant p-hacking. In this dubious practice, statistical tests are run repeatedly on a dataset in an attempt to yield statistically significant results, irrespective of the research question or hypothesis (if any) at hand. Beyond p-hacking, scientists also found calculation errors, including infeasible means and standard deviations. This was, perhaps, no accident. In an email, the PI instructed a lab member to dig through a dataset to find significant relationships among variables, writing, “I don’t think I’ve ever done an interesting study where the data ‘came out’ the first time I looked at it.” A few years after these discoveries were made, several of the PI’s publications were retracted. An academic misconduct investigation prompted his removal from teaching and research duties at the university.

Scenario 2: Excel Fail

In 2013, a graduate student at the University of Massachusetts Amherst was assigned a class project to select an article from an economics journal and attempt to reproduce its findings. After several unsuccessful attempts, the student assumed that he had made some mistake in his own analysis. Even after obtaining the underlying analysis data from the well-respected authors themselves, he was still unable to reproduce the results. Upon closer inspection of the spreadsheet he was given, however, he made a critical discovery that would shock the entire field of economics.

That discovery was a basic Excel miscalculation. It called into question the findings of an influential article that had been cited over 4,000 times and used by governments to justify specific austerity measures to address economic crises. Corrections to the analysis yielded results that differed from those in the original article, and the student later published them. The authors nevertheless insisted that these differences did not change their central findings. They did, however, acknowledge their error in a public statement: “It is sobering that such an error slipped into one of our papers despite our best efforts to be consistently careful. We will redouble our efforts to avoid such errors in the future.”

Scenario 3: Power(less) Pose

With over 60 million online views, one of the most popular TED Talks convinced many people that “power posing” induces an actual sense of increased power. The presenter, a social psychologist, shared the results of a study she had published with two colleagues. The study investigated the physiological and behavioral effects of posing in open, expansive postures: wide stance, hands on hips. The authors found that embodiments of power (i.e., power poses) cause a decrease in cortisol (the stress hormone) while increasing testosterone and risk tolerance. Based on these observations, they concluded that power posing manifests actual feelings of power.

Other scientists repeated the study but failed to obtain comparable results that could demonstrate the robustness of the original power pose findings. Some in the scientific community then cast doubt on the soundness of the original study design and protocols. One of the most vocal skeptics was a co-author of the original study, who later announced, “I do not believe that ‘power pose’ effects are real.” She pointed to the small sample size and a questionable participant selection process. She also explained that the experiment involved male and female participants (not distinguished by gender) who performed risk-taking tasks and were subsequently informed that they had won small cash prizes. Because the act of winning increases testosterone levels, there was no way to determine whether the increase in testosterone was an effect of the power pose, as the paper concluded, or merely an effect of winning. Despite this controversy, studies on power posing continue, with varying results.

Solution

Scenario 1 (Slicing and Dicing Food Data): Statistical Reproducibility

In this scenario, research results were generated using an inappropriate, dubious statistical technique. The statistical analyses were also rife with errors and miscalculations, which would make it impossible for anyone to arrive at the same results. This example demonstrates a lack of statistical reproducibility.

Scenario 2 (Excel Fail): Computational Reproducibility

In this scenario, the student attempted to reproduce the findings in a journal article using the authors’ own data, the same data used to generate the results reported in the article. Unfortunately, the student, Thomas Herndon, was unable to do so, which demonstrates a failure to computationally reproduce the published results.

Scenario 3 (Power(less) Pose): Empirical Reproducibility

In this scenario, researchers repeated the steps of the original power pose experiment but arrived at different results. Problems with the study design and protocols rendered the original power pose study empirically irreproducible.
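Scenario 1 describes p-hacking as running statistical tests repeatedly until something comes out significant. A short calculation shows why that practice yields findings no one can reproduce. The sketch assumes the following:

- k independent tests;
- every null hypothesis true, so each p-value is Uniform(0, 1);
- a significance threshold of 0.05.

Under these assumptions, the chance of at least one "significant" result is 1 - (1 - 0.05)^k. The Monte Carlo simulation checks that formula. Real p-hacking, with correlated outcomes and flexible analysis choices, is messier than this simplification.

```python
import random


def familywise_error(k, alpha=0.05):
    """Probability of at least one p < alpha among k independent tests of true nulls."""
    return 1 - (1 - alpha) ** k


def simulate_p_hacking(k, alpha=0.05, trials=100_000, seed=1):
    """Monte Carlo estimate: under a true null, each p-value is Uniform(0, 1)."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        # Run k "tests" on noise and stop as soon as one looks significant.
        if any(rng.random() < alpha for _ in range(k)):
            hits += 1
    return hits / trials


print(round(familywise_error(20), 3))  # 0.642
print(simulate_p_hacking(20))          # close to the analytic value
```

With 20 looks at pure noise, roughly two out of three datasets yield at least one "significant" relationship.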

Spotlight: What About Qualitative Research?

Qualitative research methodologies do not rely on analyses of quantitatively measurable variables (other than research that transforms qualitatively derived data into quantitatively analyzable data, e.g., text mining, natural language processing), which is why some scholars have argued that reproducibility standards do not or cannot apply to qualitative research. There is no doubt, however, that findings from qualitative studies must be subject to the same level of scrutiny as those produced by their quantitative counterparts. Certainly, the credibility of qualitative research is just as important as the credibility of quantitative research. Assessing the reproducibility of qualitative research is conceivable, albeit with a focus on transparency.

Considering the more constructivist nature of most qualitative research (in contrast to positivist quantitative research), assessments of research integrity emphasize production transparency and analytic transparency. Production transparency requires that the processes for research participant selection, data collection, and analytic interpretation—and the decisions that informed these processes—be documented in explicit detail. Analytic transparency demands clear descriptions and explanations of the methods and logic used to draw conclusions from the data. For qualitative research, a high degree of production and analytic transparency is prerequisite for demonstrating research rigor and credibility.

Suggested readings:

Elman, C., Kapiszewski, D., & Lupia, A. (2018). Transparent social inquiry: Implications for social science. Annual Review of Political Science, 21, 29–47. https://doi.org/10.1146/annurev-polisci-091515-025429

Talkad Sukumar, P., & Metoyer, R. (2019). Replication and transparency of qualitative research from a constructivist perspective [Preprint]. Open Science Framework. https://doi.org/10.31219/osf.io/6efvp

The Impetus for Scientific Reproducibility

To appreciate the importance of scientific reproducibility, consider the belief that autism is linked to vaccines. This notion first appeared in a 1998 article by Andrew Wakefield and his colleagues. They reported results of a study of a small group of children who had received the MMR vaccine and were later diagnosed with autism. A subsequent examination of the study protocols and of data from health records, however, revealed that no interpretation of the data could reasonably conclude that the autism diagnoses were linked to the vaccine. The Wakefield article was eventually retracted by The Lancet, the journal that published it, and Wakefield was found guilty of scientific misconduct and fraud. Our understanding of the relationship between autism and vaccines is more reliably supported by the many studies and meta-analyses on the subject. These have consistently shown that vaccines do not cause autism.

For scientific claims to be credible, they must be able to stand up to scrutiny, which is a hallmark of science. The scientific community promotes the credibility of its claims through systems of peer review and research replication. Confirmation of the reproducibility of research results adds another necessary element of research checks and balances, particularly with scientific research increasingly becoming computationally intensive. The ability of a researcher to obtain and use the data and analysis code from the author of published scientific findings to reproduce those findings independently is an essential standard by which the scientific community can judge the integrity of the scientific record.

Despite the vaccine-autism link having been disproven, Wakefield’s research continues to be cited in anti-vaccine campaigns that spread misinformation and disinformation about so-called dangers of vaccines. Such campaigns have contributed to vaccine hesitancy, which has hindered public health initiatives to reduce the spread of pandemic diseases through vaccination. This example underscores the importance of recent calls for reproducibility as a precondition of scientific publication.

Suggested readings:

Motta, M., & Stecula, D. (2021). Quantifying the effect of Wakefield et al. (1998) on skepticism about MMR vaccine safety in the U.S. PLOS ONE, 16(8), e0256395. https://doi.org/10.1371/journal.pone.0256395

Ullah, I., Khan, K. S., Tahir, M. J., Ahmed, A., & Harapan, H. (2021). Myths and conspiracy theories on vaccines and COVID-19: Potential effect on global vaccine refusals. Vacunas, 22(2), 93–97. https://doi.org/10.1016/j.vacun.2021.01.001

The Reproducibility Mandate

There are plenty of high-profile examples of research that was found to be irreproducible, prompting the question of whether science is experiencing a “reproducibility crisis.” Recent studies of the extent to which published research is reproducible have raised concern. In them, investigators were unable to reproduce the findings of a substantial portion of the research they examined.

In a 2016 survey conducted by the journal Nature, researchers were asked whether there was a reproducibility crisis in science. Of the 1,576 researchers who responded, 90% agreed that there was at least a slight crisis. Seventy percent conceded that they had been unable to reproduce a study conducted by another scientist, and over half admitted to being unable to reproduce a study that they themselves had conducted!

The reasons for irreproducibility are many, but the issues that appear most often stem from scientific practices that overlook data management and curation activities. These issues include:

- missteps in analysis workflows due to gaps in documentation;
- ethical or legal violations, such as sharing non-anonymized human subjects data or redistributing restricted proprietary data;
- code execution failures caused by mismatched computing environments;
- inconsistent analysis outputs from using the wrong versions of data files;
- lack of access to the data and code needed to reproduce results.

Although these issues may seem minor, their repercussions can be serious.
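One of the issues listed above, running an analysis against the wrong version of a data file, can be caught with checksums. The sketch below records a SHA-256 checksum for each data file when results are produced and later reports any file that is missing or has changed. The manifest format (a dictionary of relative paths to checksums) and the `survey.csv` file are hypothetical choices for illustration.

```python
import hashlib
import os
import tempfile


def sha256_of(path, chunk_size=65536):
    """Compute the SHA-256 checksum of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_manifest(manifest, base_dir):
    """Return files that are missing or whose checksum differs from the recorded one."""
    problems = []
    for relative_path, expected in manifest.items():
        path = os.path.join(base_dir, relative_path)
        if not os.path.exists(path) or sha256_of(path) != expected:
            problems.append(relative_path)
    return problems


with tempfile.TemporaryDirectory() as tmp:
    data_path = os.path.join(tmp, "survey.csv")
    with open(data_path, "w") as handle:
        handle.write("id,score\n1,3\n2,5\n")
    # Checksums recorded at the time the published results were produced.
    manifest = {"survey.csv": sha256_of(data_path)}
    clean = verify_manifest(manifest, tmp)
    with open(data_path, "a") as handle:
        handle.write("3,4\n")  # a later, different version of the same file
    changed = verify_manifest(manifest, tmp)

print(clean)    # []
print(changed)  # ['survey.csv']
```

Shipping such a manifest with the data lets anyone attempting a reproduction confirm they hold the exact file versions the original analysis used.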

Spotlight: Yet Another Term: “Preproducibility”

In a 2018 article published in the journal Nature, Philip Stark proposed another term for discussions of reproducibility: preproducibility. He wrote, “An experiment or analysis is preproducible if it has been described in adequate detail for others to undertake it. Preproducibility is a prerequisite for reproducibility…” (p. 613). According to Stark, the question of reproducibility is irrelevant if documentation of research methods and protocols is insufficient or unavailable. Stark declared his unequivocal stance on the subject, declining to review any manuscript that is not preproducible.

Read the article that introduces the concept of preproducibility here:

Stark, P. B. (2018). Before reproducibility must come preproducibility. Nature, 557(7707), 613–613. https://doi.org/10.1038/d41586-018-05256-0

Perhaps with the so-called reproducibility crisis in mind, various research stakeholders have taken steps to promote and protect the integrity of scientific research. They have issued policies or guidelines that require researchers to perform data management tasks to ensure data are accessible and usable. These stakeholders include funding agencies that support research initiatives, scholarly journals that publish the scientific record, and academic societies that establish standards for research conduct.

Funding Agencies

Funding agencies make billions of dollars in scientific investments by sponsoring grant programs that provide support to research projects. To maximize their investments, many funders have issued policies that require grant awardees to make the materials produced in the course of funded research activities publicly available. By doing so, the scientific community can reuse datasets, analysis code, and other research artifacts to extend and verify results. Below are examples of funding agencies that have data policies in place.

| Funding Agency | Summary |
|---|---|
| Alfred P. Sloan Foundation | “Scientific progress depends on the sharing of information, on the replication of findings, and on the ability of every individual to stand on the shoulders of her predecessors…potential grantees are asked to attend to the outputs their research will create and how those outputs can best be put in service to the larger scientific community.” |
| Institute of Museum and Library Services (IMLS) | “The digital products you create with IMLS funding require effective stewardship to protect and enhance their value, and they should be freely and readily available for use and reuse by libraries, archives, museums, and the public.” |
| NASA | “…scientific data product algorithms and data products or services produced through the [Earth Science] program shall be made available to the user community on a nondiscriminatory basis, without restriction, and at no more than the marginal cost of fulfilling user requests.” |
| National Institutes of Health (NIH) | “The National Institutes of Health (NIH) Policy for Data Management and Sharing (herein referred to as the DMS Policy) reinforces NIH’s longstanding commitment to making the results and outputs of NIH-funded research available to the public through effective and efficient data management and data sharing practices.” |
| National Science Foundation (NSF) | “Investigators are expected to share with other researchers, at no more than incremental cost and within a reasonable time, the primary data, samples, physical collections and other supporting materials created or gathered in the course of work under NSF grants. Grantees are expected to encourage and facilitate such sharing.” |

Scholarly Journal Publishers

As stewards of the scientific record, journals bear responsibility for ensuring that the articles they publish contain verifiable claims. Moreover, journals recognize the role they play in traditional tenure and promotion structures, which appraise scientific productivity based on publication of articles in scholarly journals. Thus, journal policies that make publication contingent on data sharing and verification are considered a promising tool for advancing research reproducibility. Below are examples of journals with rigorous data policies.

| Scholarly Journal | Summary |
|---|---|
| American Journal of Political Science (AJPS) | “The corresponding author of a manuscript that is accepted for publication in the American Journal of Political Science must provide materials that are sufficient to enable interested researchers to verify all of the analytic results that are reported in the text and supporting materials…When the draft of the manuscript is submitted, the materials will be verified to confirm that they do, in fact, reproduce the analytic results reported in the article.” |
| American Economic Association (AEA) Journals | “Authors of accepted papers that contain empirical work, simulations, or experimental work must provide, prior to acceptance, information about the data, programs, and other details of the computations sufficient to permit replication, as well as information about access to data and programs…The AEA Data Editor will assess compliance with this policy, and will verify the accuracy of the information prior to acceptance by the Editor.” |
| eLife | “Regardless of whether authors use original data or are reusing data available from public repositories, they must provide program code, scripts for statistical packages, and other documentation sufficient to allow an informed researcher to precisely reproduce all published results.” |
| Personality Science | “Personality Science (PS) takes good, transparent, reproducible, and open science very seriously. This means that all published papers will have underwent screening regarding to what extent they have fulfilled Transparency and Openness Promotion (TOP) Guidelines.” |
| Science | “All data used in the analysis must be available to any researcher for purposes of reproducing or extending the analysis…In general, all computer code central to the findings being reported should be available to readers to ensure reproducibility…Materials/samples used in the analysis must be made available to any researcher for purposes of directly replicating the procedure.” |

Academic Societies

Academic societies have taken up the issue of reproducibility in updated professional codes of conduct or ethics. Citing responsibility to advance research in their discipline, these formal documents obligate researchers to enable others to evaluate their knowledge claims through transparent research practices and public access to data and materials underlying those knowledge claims. Below is a list of academic societies that have issued policies or statements promoting data access and research transparency.

| Academic Society | Summary |
|---|---|
| American Geophysical Union (AGU) | “The cost of collecting, processing, validating, documenting, and submitting data to a repository should be an integral part of research and operational programs. The AGU scientific community should recognize the professional value of such activities.” |
| American Psychological Association (APA) | “After research results are published, psychologists do not withhold the data on which their conclusions are based from other competent professionals who seek to verify the substantive claims through reanalysis and who intend to use such data only for that purpose, provided that the confidentiality of the participants can be protected and unless legal rights concerning proprietary data preclude their release.” |
| American Sociological Association (ASA) | “Consistent with the spirit of full disclosure of methods and analyses, once findings are publicly disseminated, sociologists permit their open assessment and verification by other responsible researchers, with appropriate safeguards to protect the confidentiality of research participants…As a regular practice, sociologists share data and pertinent documentation as an integral part of a research plan.” |

Key Points

The term ‘reproducibility’ has been used in different ways in different disciplinary contexts.

Computational reproducibility, which is the focus of this and follow-up lessons, refers to the duplication of reported findings by re-executing the analysis with the data and code used by the original author to generate their findings.

Scientific reproducibility is not a novel concept, but one that has been reiterated by prominent scholars throughout history as a cornerstone of scientific practice.

Failed attempts to reproduce published scientific research are considered by some to be reflective of an ongoing crisis in scientific integrity.

Stakeholders have taken note of the importance of reproducibility and thus have issued policies requiring researchers to share their research artifacts with the scientific community.

Reproducibility Standards

Overview

Teaching: 30 min

Exercises: 15 min

Questions

What makes research reproducible?

How does a research compendium support reproducibility?

What are common obstacles to making research reproducible?

Objectives

Explain the requirements for meeting the reproducibility standard.

Describe the contents of a research compendium.

Recall some of the challenges that can hinder efforts to make research reproducible.

In the previous episode, we established our working definition of “reproducibility” as being able to reproduce published results given the same data, code, and other research artifacts originally used to execute the computational workflow. In this episode, we go beyond the definition of reproducibility to explore the standards by which we evaluate reproducibility.

Reproducibility Standards

In 1995, Gary King, a professor of political science at Harvard University, proposed a new “replication standard.” He considered it imperative to the discipline’s ability to understand, verify, and expand its scholarship. King uses the term “replication,” but his use is analogous to our working definition of reproducibility:

The replication standard holds that sufficient information exists with which to understand, evaluate, and build upon a prior work if a third party could replicate the results without any additional information from the author (p. 444).

Accessibility and usability of the materials needed to reproduce reported research results, without resorting to intervention from the original author, are central to King’s standard. As you will learn, they are also at the core of curation for reproducibility practices.

Read King’s seminal article here:

King, G. (1995). Replication, replication. PS: Political Science & Politics, 28(3), 444–452. https://doi.org/10.2307/420301

Spotlight: FAIR Principles

Mention of the FAIR Principles has become ubiquitous in discussions of research data management. Some funding agencies have even cited the FAIR Principles in documents that provide guidance for their data management policies. FAIR, which stands for findable, accessible, interoperable, and reusable, was developed as a set of guiding principles that help to sustain and enhance the value of data for scientific discovery, knowledge-making, and innovation, while avoiding the technical obstacles that can challenge those goals.

In the summary of the FAIR Principles below, pay particular attention to the Reusable principle. If reproduction is considered one type of reuse, this principle aligns directly with some of the standards we uphold when curating for reproducibility.

Findable

The data are uniquely identified and described using machine-readable metadata to enable both systems and humans to find the data in a searchable system.

F1. (Meta)data are assigned a globally unique and persistent identifier

F2. Data are described with rich metadata (defined by R1 below)

F3. Metadata clearly and explicitly include the identifier of the data they describe

F4. (Meta)data are registered or indexed in a searchable resource

Accessible

The data can be retrieved using their unique identifier without the need for specialized tools or services. If the data are no longer available, or if access requires certain procedures, the descriptive metadata can still be retrieved this way.

A1. (Meta)data are retrievable by their identifier using a standardized communications protocol

A1.1 The protocol is open, free, and universally implementable

A1.2 The protocol allows for an authentication and authorisation procedure, where necessary

A2. Metadata are accessible, even when the data are no longer available

Interoperable

The data and descriptive metadata are standardized to enable exchange and interpretation of data by different people and systems.

I1. (Meta)data use a formal, accessible, shared, and broadly applicable language for knowledge representation.

I2. (Meta)data use vocabularies that follow FAIR principles

I3. (Meta)data include qualified references to other (meta)data

Reusable

The data are presented with enough detail that it is clear to designated users the origins of the data, how to interpret and use the data appropriately, and by whom and for what purposes the data may be used.

R1. (Meta)data are richly described with a plurality of accurate and relevant attributes

R1.1. (Meta)data are released with a clear and accessible data usage license

R1.2. (Meta)data are associated with detailed provenance

R1.3. (Meta)data meet domain-relevant community standards

Read more about the FAIR Principles here:

GO FAIR. (n.d.). FAIR Principles. GO FAIR. Retrieved May 6, 2022, from https://www.go-fair.org/fair-principles/

Wilkinson, M. D., Dumontier, M., Aalbersberg, Ij. J., Appleton, G., Axton, M., Baak, A., Blomberg, N., Boiten, J.-W., da Silva Santos, L. B., Bourne, P. E., Bouwman, J., Brookes, A. J., Clark, T., Crosas, M., Dillo, I., Dumon, O., Edmunds, S., Evelo, C. T., Finkers, R., … Mons, B. (2016). The FAIR Guiding Principles for scientific data management and stewardship. Scientific Data, 3, 160018. https://doi.org/10.1038/sdata.2016.18
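The FAIR Principles deliberately avoid prescribing a particular format, but a few of them translate into simple checks a curator might run on a metadata record. The sketch below is a minimal illustration only. It assumes a record stored as a Python dictionary with hypothetical field names (`identifier`, `description`, `license`, `provenance`) mapped to F1, F2, R1.1, and R1.2; real metadata schemas use their own element names, and the presence of a field says nothing about its quality.

```python
# Hypothetical field names mapped to a few FAIR sub-principles; real schemas
# (for example DataCite or Dublin Core) use their own element names.
REQUIRED_FIELDS = {
    "identifier": "F1: globally unique and persistent identifier",
    "description": "F2: rich metadata",
    "license": "R1.1: clear and accessible data usage license",
    "provenance": "R1.2: detailed provenance",
}


def missing_fair_fields(record: dict) -> list[str]:
    """Return the principle labels whose field is absent or blank in record."""
    return [
        label
        for field, label in REQUIRED_FIELDS.items()
        if not str(record.get(field, "")).strip()
    ]


if __name__ == "__main__":
    # Illustrative record; the identifier is a made-up placeholder, not a real DOI.
    record = {
        "identifier": "doi:10.0000/example-dataset",
        "description": "Survey responses used in the analysis.",
        "license": "CC BY 4.0",
    }
    for label in missing_fair_fields(record):
        print("Missing:", label)
```

Run as a script, the example record is flagged only for its missing provenance field. A presence check like this is a first pass at most; whether an identifier is truly persistent or a license truly clear still calls for human judgment.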

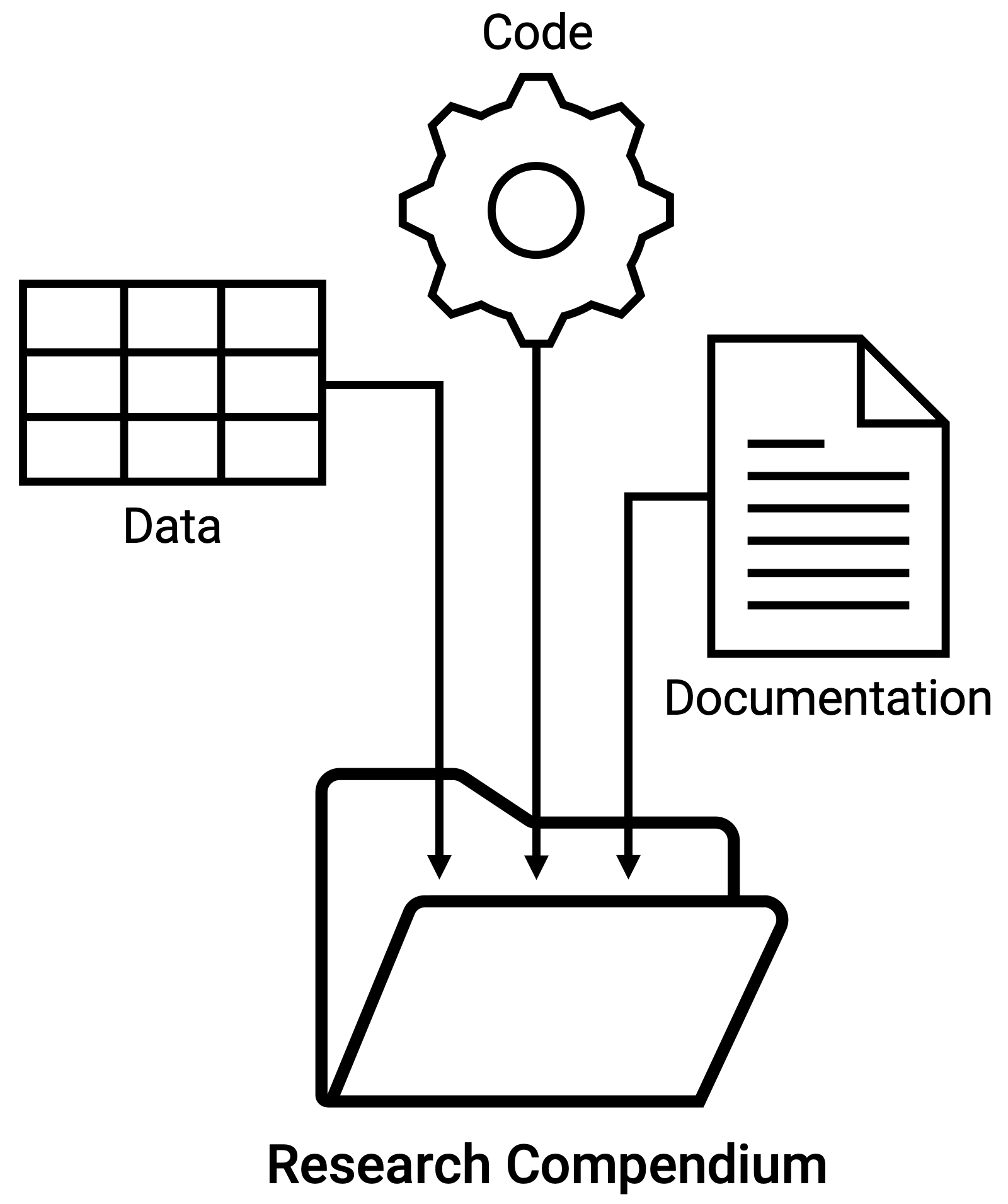

The Research Compendium

The first known mention of the research compendium concept appeared in an article by Gentleman and Lang (2007), who proposed the compendium as a “new mechanism that combines text, data, and auxiliary software into a distributable and executable unit” (p. 2). Noting the challenges of assembling the artifacts needed to re-run a statistical analysis to computationally reproduce published research results, Gentleman and Lang argued for the need to capture the steps of the analytical workflow in a compendium containing one or more “dynamic documents” that encapsulate the description of the analysis (manuscript and documentation), the analysis inputs (data and code), and the computing environment (computational and analytical software).

To put it more simply for this Curating for Reproducibility curriculum, we refer to the research compendium as the collection of the research artifacts necessary to independently understand and repeat the entirety of the analysis workflow from data processing and transformation to producing results.

At a minimum, the research compendium as we define it, which may also be referred to as a reproducibility file bundle or reproducibility package, contains the following research artifacts:

- Data. Dataset files including the raw or original data, and constructed data used in the analysis.

- Code. Processing scripts used to construct analysis datasets from raw or original data, and analysis scripts that generate reported results.

- Documentation. Information sufficient to enable re-execution of the analysis workflow, including data dictionaries, data availability statements for restricted data, code execution instructions, computing environment specifications, and any other details necessary to reproduce results.

The research compendium supports the reproducibility standard by providing access to all of the information, materials and tools necessary to independently reproduce the associated results. Curating for reproducibility applies curation actions to the research compendium.
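A curator receiving a compendium might begin by confirming that each of the three minimal components is present at all. The sketch below assumes a hypothetical layout (a `data/` folder, a `code/` folder, and a top-level README file); compendia are organized in many different ways in practice, so treat these names as placeholders. A passing check shows only that files exist, not that they are sufficient to reproduce results.

```python
from pathlib import Path


def check_compendium(root: str) -> dict[str, bool]:
    """Report whether each minimal compendium component appears under root.

    Assumes a hypothetical layout: non-empty data/ and code/ folders plus a
    top-level README file with any extension (README.md, readme.txt, ...).
    """
    base = Path(root)
    if not base.is_dir():
        return {"data": False, "code": False, "documentation": False}

    def non_empty_dir(name: str) -> bool:
        folder = base / name
        return folder.is_dir() and any(folder.iterdir())

    # Match README, README.md, readme.txt, etc. (case-insensitive stem).
    has_readme = any(
        p.is_file() and p.stem.upper() == "README" for p in base.iterdir()
    )
    return {
        "data": non_empty_dir("data"),
        "code": non_empty_dir("code"),
        "documentation": has_readme,
    }


if __name__ == "__main__":
    import sys

    # Check the folder given on the command line, or the current directory.
    target = sys.argv[1] if len(sys.argv) > 1 else "."
    for component, present in check_compendium(target).items():
        print(f"{component}: {'found' if present else 'MISSING'}")
```

An empty `code/` folder is reported as missing, since a compendium with no scripts cannot be re-executed.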

Spotlight: Curating for Reproducibility: Beyond Data

Curating for reproducibility requires that we think beyond data as the singular object of curation. When considered alone, data that are free of errors, clean of personally identifiable information, and well-documented may be deemed high-quality.

In the context of reproducibility, however, quality standards are applied to the research compendium, of which data is but one component. If an attempt to independently reproduce reported results using the compendium fails, then a quality assessment of the data alone is insufficient.

Curating for reproducibility considers the research compendium as the object of curation, with the goal of ensuring that all of the artifacts contained within it capture all of the materials, information, computational workflows, and technical specifications needed to re-execute the analysis to reproduce published results.

Independently Understandable for Informed Reuse

Curation in support of scientific research has commonly centered on data curation to support long-term access to and reuse of high-quality datasets. It is useful to think about quality not so much as attributes of the data, but as a “set of measures that determine if data are independently understandable for informed reuse” (Peer et al., 2014).

Independent Understandability

Independent understandability is a key curation concept that refers to the ability of anyone unrelated to the production of the data to interpret and use the data without needing input from the original producer(s). To enable reuse, then, data need to be processed, documented, shared, and preserved in a way that ensures they are “independently understandable to (and usable by) the Designated Community.” A Designated Community is “an identified group of potential Consumers who should be able to understand a particular set of information….[it] is defined by the Archive and this definition may change over time” (CCSDS, 2012).

Reusability

The other important concept in data curation is reusability. Motivations for reusing extant data vary and include data verification, new analysis, re-analysis, and meta-analysis. Reproduction of the original analysis and results, which is the focus of this curriculum, is a type of reuse that sets an even higher bar for quality because it requires that code and detailed documentation, not only the data, be curated and packaged into a research compendium that allows the corresponding published results to be regenerated.

Spotlight: 10 Things for Curating Reproducible and FAIR Research

The measures used to determine the quality of a research compendium intended for reproducing reported results are described in the 10 Things for Curating Reproducible and FAIR Research. The 10 Things, summarized below, are the output of the Research Data Alliance’s CURE-FAIR Working Group. This international community of information professionals, researchers, funding agencies, publishers, and others interested in promoting reproducibility practices worked together to identify and describe specific requirements for making research reproducible.

CURE-FAIR Working Group. (2022). 10 things for curating reproducible and FAIR research. Research Data Alliance. https://doi.org/10.15497/RDA00074

Knowing what makes research reproducible allows us to recognize research that is not reproducible. The next challenge revisits the scenarios from the challenge in the previous episode to get us thinking about the causes of irreproducibility and their potential consequences.

Exercise: The Impact of Irreproducibility

Consider again the three scenarios from the exercise in Episode 1 (“Reproducibility, Reproducibility, Reproducibility”). Provide responses to the following questions about each scenario.

- What were the causes of non-reproducibility?

- What may have been some consequences of the discovery that the study was not reproducible?

- What could the researchers of the original study have done differently to avoid the issues that rendered the research non-reproducible?

Solution

Scenario 1 (Slicing and Dicing Food Data): All of the research outputs from the food lab have been called into question since the discovery of various problems in studies published by the PI of the lab. The nutrition policies and programs in which the PI played a part may need to be reconsidered in light of accusations of scientific misconduct. The PI’s published research findings were not reproducible because of his failure to provide access to documentation and data that could demonstrate the integrity of his analytic methods and results. Without such access, his scientific claims are indefensible. Beyond providing access to documentation, data, and code, pre-registration could have been an effective strategy for preventing the problems seen in the lab’s work and making results analytically reproducible. Pre-registering a study obliges researchers to declare their hypothesis, study design, and analysis plan before research activities begin. Deviations from the plan require documented explanation, which creates greater research transparency.

Scenario 2 (Excel Fail): Austerity measures that governments implemented based on the findings in the original article may not have yielded the expected outcomes and may instead have exacerbated the economic crisis in their respective countries. Non-reproducibility was primarily due to errors made in the Excel spreadsheet the original authors used for calculations. Rather than rely on a spreadsheet program, the authors could have used statistical software designed for data analysis to write and execute code to generate results. The code itself reveals the analytic steps taken to arrive at the reported results, which would have enabled the authors (and secondary users) to inspect and verify the validity of their analytic workflow and outputs and would have promoted computational reproducibility.

Scenario 3 (Power(less) Pose): The publication of the power pose research and the scrutiny it met happened in the midst of heated arguments among psychologists about the scientific rigor of research produced in their disciplinary domain. Some scholars in the field took umbrage at published studies that presented seemingly unlikely findings and set to work assessing the validity of these findings. What they discovered was widespread abuse of researcher degrees of freedom as a means of generating positive, and likely more publishable, results. Because of the publicity it received, this research, with its questionable methods and perhaps overstated positive results, became something of a poster child for the so-called reproducibility crisis in psychology. This cast widespread doubt not only on those particular research findings, but also on the field of psychology writ large. Clear and comprehensive documentation and justification of the research protocols used in the power pose experiments would have helped bolster the empirical reproducibility of the research claims.

Challenges to Reproducibility

While much of the research community and its stakeholders have reached a consensus that reproducibility is imperative to the scientific enterprise, the challenges that come with efforts to make research reproducible must be acknowledged. Indeed, in some situations reproducibility is much easier said than done.

Human Subjects Research

Research that involves human subjects is bound by laws and regulations that place restrictions on data sharing, in some cases disallowing dissemination for any purpose, including verifying the reproducibility of the results of that research. To avoid sanctions for unknowingly failing to protect the identities of study participants, researchers will often decline to share the data or opt to destroy the data upon completion of their analyses.

Copyright and Intellectual Property Rights

As with human subjects research, investigations often rely on proprietary data and/or code from commercial sources that cannot be redistributed because they are subject to licensing restrictions or intellectual property laws that disallow doing so. Since access to the data and materials underlying research results is a basic requirement for substantiating published claims, proprietary or otherwise restricted data stand as an obstacle to research reproducibility.

Technological Hurdles

Even with the same data, code, and other research materials used by the original investigator, attempts to computationally reproduce scientific results can prove quite difficult when the technology needed to re-execute the analysis presents challenges. Some analyses require computing environments with exacting specifications that are difficult to recreate. Others are resource-intensive, demanding the processing power of high-performance computing that may not be readily accessible.

Time and Effort

Reproducible research requires code written using literate programming practices, with non-executable comments indicating the function of each code block; datasets accompanied by codebooks or data dictionaries that define each variable and its categorical value codes; readme files that describe the process for executing the analysis; and any other materials necessary to independently execute the analysis and generate outputs identical to the original results. Preparing these materials may take a great deal of time and effort that researchers might not have, especially when there is little or no incentive to do so.
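Some of this preparation can be partly automated. As a minimal sketch, the function below drafts a codebook skeleton from the contents of a CSV file: it lists each variable and, for variables with few distinct values, the value codes observed in the data. The cutoff for treating a variable as categorical (`max_codes`) is an arbitrary assumption made for this example, and the description fields are deliberately left blank because only the researcher can say what a variable means.

```python
import csv
import io


def draft_data_dictionary(csv_text: str, max_codes: int = 10) -> list[dict]:
    """Draft a codebook skeleton: one entry per variable with observed codes.

    Variables with more than max_codes distinct values are treated as
    non-categorical (codes=None). This threshold is a rough heuristic
    assumed here, not a standard rule.
    """
    reader = csv.DictReader(io.StringIO(csv_text))
    values: dict[str, set[str]] = {name: set() for name in reader.fieldnames or []}
    for row in reader:
        for name in values:
            # Short rows yield None; record them as empty strings instead.
            values[name].add(row.get(name) or "")
    entries = []
    for name, seen in values.items():
        codes = sorted(seen) if len(seen) <= max_codes else None
        # Descriptions stay blank: meaning must come from the researcher.
        entries.append({"variable": name, "description": "", "codes": codes})
    return entries


if __name__ == "__main__":
    # Tiny made-up dataset for illustration only.
    sample = "id,treated\n1,0\n2,1\n3,0\n"
    for entry in draft_data_dictionary(sample, max_codes=2):
        print(entry)
```

With `max_codes=2`, `id` is treated as non-categorical (its `codes` value is `None`), while `treated` lists the observed codes `"0"` and `"1"`. The researcher would still need to explain what those codes stand for.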

Are these challenges insurmountable? Not always. In many cases, strategies exist that account for these challenges to make it possible to still uphold reproducibility standards. In Lesson 4: Compendium Packaging and throughout the Curating for Reproducibility Curriculum, you will learn about these strategies and how to apply them to special cases involving technical complexities, sensitive human subjects data, and restricted proprietary data.

Spotlight: A Reproducibility Dare

When Nicolas Rougier and Konrad Hinsen, the originators of the Ten Years Reproducibility Challenge, issued an invitation to researchers to find the code they used to generate results presented in any article published before 2010 and then use the unedited code to reproduce the results, they suspected that few would succeed.

Ten years is considered an eternity when it comes to the longevity of computations. Rapidly changing technologies render hardware and software obsolete, and evolving computational approaches relegate once-novel programming languages to outdated status in significantly less time than ten years. The 35 entrants confirmed this to be the case: they resorted to hardware emulators or purchases from online vendors to obtain old hardware, reached into the depths of memory to revive fading programming language fluency, and confronted poor coding practices that subsequent years of experience have since remedied. The difficulty of the challenge cannot be overstated.

One crucial takeaway that participants noted was the importance of documentation to preserve the critical information about where to locate the code, what hardware and software are needed to replicate the original computing environment, and how to run the code to successfully generate expected outputs. While constantly evolving technology can make reproducibility elusive, comprehensive documentation is the key to making it at all possible.

Learn more about the Ten Years Reproducibility Challenge here:

Perkel, J. M. (2020). Challenge to scientists: Does your ten-year-old code still run? Nature, 584(7822), 656–658. https://doi.org/10.1038/d41586-020-02462-7
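Recording the computing environment, one of the pieces of documentation participants found crucial, is among the easier habits to build into a workflow. The Python sketch below is an illustration only: it collects a few basic facts (interpreter version, operating system, and the installed versions of named packages) into text that could be pasted into a readme. The package names in the usage example are placeholders, and a summary like this captures far less than a full environment specification such as a lock file or container image.

```python
import platform
import sys
from importlib import metadata


def describe_environment(packages: list[str]) -> str:
    """Return a plain-text summary of the current computing environment.

    packages lists the distribution names an analysis depends on; any
    names passed in are the caller's assumption, not a required set.
    """
    lines = [
        f"Python: {sys.version.split()[0]}",
        f"Implementation: {platform.python_implementation()}",
        f"OS: {platform.system()} {platform.release()}",
        f"Machine: {platform.machine()}",
    ]
    for name in packages:
        try:
            lines.append(f"{name}: {metadata.version(name)}")
        except metadata.PackageNotFoundError:
            # Flag missing dependencies rather than failing silently.
            lines.append(f"{name}: NOT INSTALLED")
    return "\n".join(lines)


if __name__ == "__main__":
    # Example dependency names; replace with the packages your scripts import.
    print(describe_environment(["numpy", "pandas"]))
```

Saving this output alongside the code at the time results are produced gives a future reader at least a starting point for rebuilding the environment.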

Key Points

Reproducible research requires access to a “research compendium” that contains all of the artifacts and documentation necessary to repeat the steps of the analytical workflow to produce expected results.

Curating for reproducibility goes beyond curating data; it applies curation actions to all of the research artifacts within the research compendium to ensure it is independently understandable for informed reuse.

Despite calls for reproducible research, challenges exist that can make it difficult to achieve this standard.

Scientific Reproducibility and the LIS Professional

Overview

Teaching: 30 min

Exercises: 15 min

Questions

What is the role of the LIS professional in supporting reproducible research?

What are the requisite skills and knowledge for executing data curation for reproducibility workflows?

In what ways can data curation for reproducibility activities be incorporated into services?

Objectives

Explain how curation for reproducibility differs from common models of data curation that focus on data as the object of curation.

Describe what it means to be a data savvy librarian.

Provide examples of curation for reproducibility service implementation.

There are several stakeholders that contribute to reproducibility. The researcher who incorporates data management activities in their research workflows, the funding agencies who mandate data sharing, the repository that provides a platform for making the data available, and the journal editor who checks that authors provide information on how to access their data all play a role in promoting reproducibility. This episode explores why library and information science (LIS) professionals are also an important part of this endeavor and how they can support it.

The Role of the LIS Professional

Researchers are becoming more aware of services that support reproducibility standards, many of which fall within the domain of the LIS professional. They have noted that “libraries, with their long-standing tradition of organization, documentation, and access, have a role to play in supporting research transparency and preserving… ‘the research crown jewels’” (Lyon, Jeng, & Mattern, 2017, p. 57). Indeed, appraising the value of materials, arranging and describing them, creating standardized metadata, assigning unique identifiers, and other common library and archives tasks are applicable to curation for reproducibility.

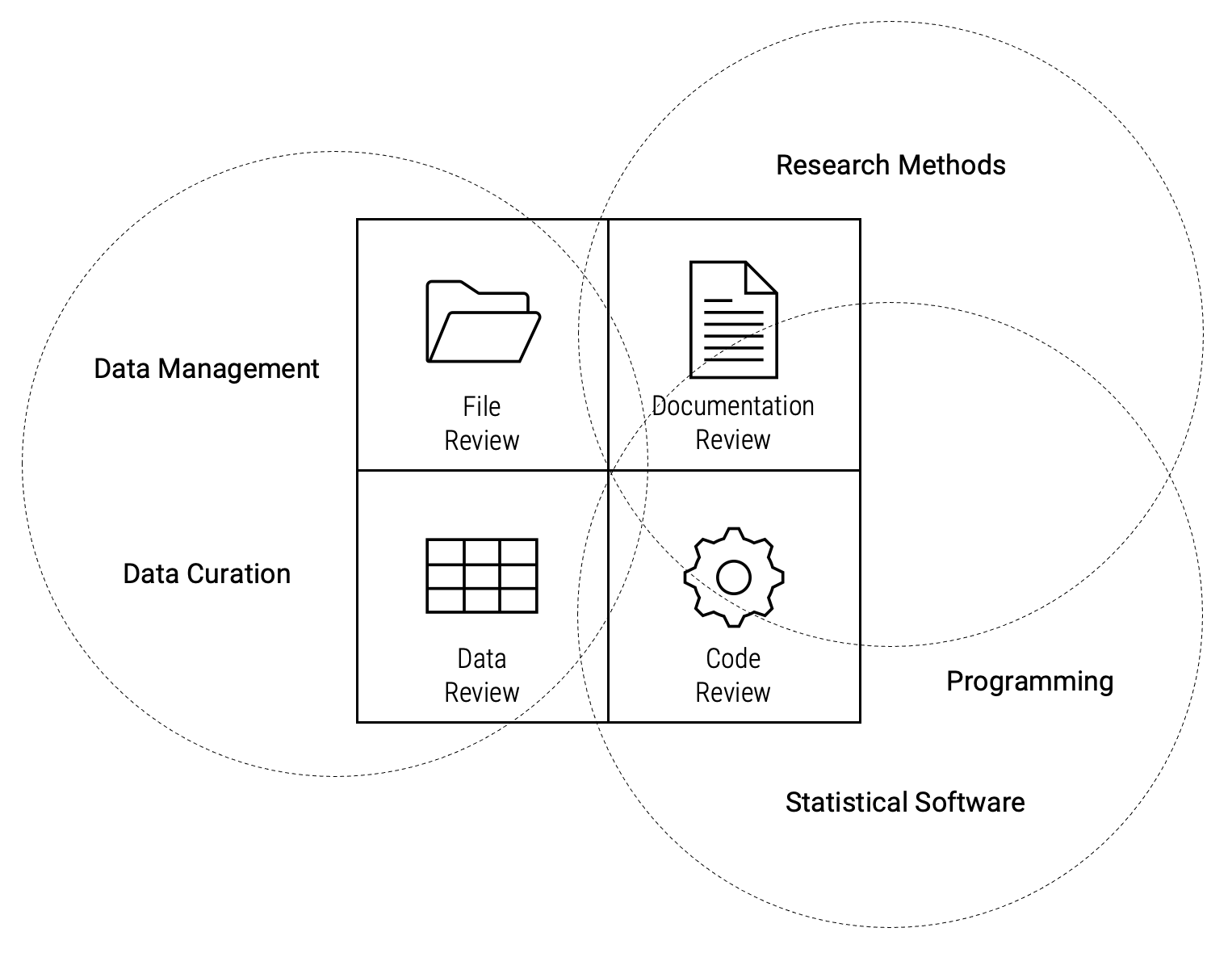

It is important to acknowledge that accepting this type of role places additional demands on the LIS toolbox. It calls for some degree of subject knowledge and the technical skills to engage in rigorous data curation activities that support sustained access to and use of high-quality research materials in order to promote scientific reproducibility (i.e., data curation for reproducibility).

The “data savvy librarian” is one who can execute data curation for reproducibility workflows because they:

- can apply the fundamentals of digital preservation to ensure that research materials are discoverable, accessible, understandable, and reusable into the future;

- have the technical skills to assess the quality of research materials produced by computational research projects; and

- are familiar with the research lifecycle including the methods, workflows, and tools of the disciplinary domain in which data are collected or generated, transformed, and analyzed.

The diagram below illustrates how various areas of skills and knowledge apply to the primary components of data curation for reproducibility as they are defined by the Data Quality Review Framework (see Lesson 2 for an in-depth discussion of each component of the Data Quality Review Framework).

(Optional) Read more about the data savvy librarian and their role in supporting scientific reproducibility:

Barbaro, A. (2016). On the importance of being a data-savvy librarian. JEAHIL, 12(1), 25–27. http://ojs.eahil.eu/ojs/index.php/JEAHIL/article/view/100/104

Burton, M., Lyon, L., Erdmann, C., & Tijerina, B. (2018). Shifting to data savvy: The future of data science in libraries. University of Pittsburgh. http://d-scholarship.pitt.edu/id/eprint/33891

Kouper, I., Fear, K., Ishida, M., Kollen, C., & Williams, S. C. (2017). Research data services maturity in academic libraries. In Curating research data: Practical strategies for your digital repository (pp. 153–170). Association of College and Research Libraries. https://doi.library.ubc.ca/10.14288/1.0343479

Lyon, L., Jeng, W., & Mattern, E. (2017). Research transparency: A preliminary study of disciplinary conceptualisation, drivers, tools and support services. International Journal of Digital Curation, 12(1), 46. https://doi.org/10.2218/ijdc.v12i1.530

Pryor, G., & Donnelly, M. (2009). Skilling up to do data: Whose role, whose responsibility, whose career? International Journal of Digital Curation, 4(2), 158–170. https://doi.org/10.2218/ijdc.v4i2.105

Sawchuk, S. L., & Khair, S. (2021). Computational reproducibility: A practical framework for data curators. Journal of eScience Librarianship, 10(3), 1206. https://doi.org/10.7191/jeslib.2021.1206

Spotlight: CuRe Career Pathways

Curating for reproducibility requires a combination of skills that few individuals possess. Pryor and Donnelly (2009) remarked that careers in data curation are often “accidental” in the absence of established career pathways. Indeed, the ways people obtain the skills to fill professional roles that include data curation for reproducibility responsibilities can be very different. Consider these examples:

A researcher with years of experience engaged in computational science comes to understand the importance of data curation after responding to data sharing demands from journals, funding agencies, and other researchers. Experiencing the benefits of managing their data to enable sharing, the researcher makes a career transition to become a data manager for a research lab. In this new role, they use their domain expertise to communicate effectively with researchers as they incorporate data curation activities into the lab’s research workflows.

A librarian who took several graduate-level courses in digital archives and records management works for an academic library that is expanding its research support services. Because of the relevance of knowledge gained from their graduate studies, the librarian is assigned a data curation role that includes performing quality review and ingesting research data into the institutional repository. After engaging with researchers to understand their data needs and taking classes in statistical software, the librarian is able to incorporate curation for reproducibility activities into the repository ingest workflow.

Among the editorial staff of some scholarly journals is a data editor, who is responsible for enforcing the journal’s strict data policies that require authors to submit their research compendium for review prior to article publication. The data editor uses their domain expertise, computational skills, and understanding of data quality standards to evaluate results for reproducibility. In this role, the data editor also provides guidance to authors to encourage them to curate their research compendium before submitting it for review.

Regardless of the path that leads them there, people whose careers involve curation for reproducibility are part of an emerging workforce equipped with a unique set of skills that are highly sought after by scientific stakeholders who demand reproducible research.

Reproducibility Services

Founded in 1947, the Roper Center is considered one of the earliest examples of an institution formalizing specific activities around data preservation and dissemination. Despite growth in the number of organizations dedicated to providing long-term access to research data assets, data curation is still early in its maturity as an established discipline. Data curation for reproducibility is even less developed, with few individuals and groups actively engaged in the practice.

Those that have implemented data curation for reproducibility services can serve as models for academic libraries, data repositories, research institutions, and other groups planning to expand their services to support reproducibility. The three institutions highlighted below offer examples of how data curation for reproducibility services can be delivered.

Institution for Social and Policy Studies, Yale University

The Institution for Social and Policy Studies (ISPS) was established in 1968 by the Yale Corporation as an interdisciplinary center at the university to facilitate research in the social sciences and public policy arenas. ISPS is an independent academic unit within the university that includes affiliates from across the social sciences. ISPS hosts its own digital repository to capture and preserve the intellectual output and research of scholars affiliated with ISPS, and it strives to serve as a model for sharing and preserving research data by implementing the ideals of scientific reproducibility and transparency.

Datasets housed in the ISPS Data Archive have undergone a rigorous ingest process that combines data curation with data quality review to ensure materials meet quality standards that support computational reproducibility. The process is managed by the Yale Application for Research Data workflow tool, which structures and tracks curation and review activities to generate high quality data packages that are repository-agnostic.

Cornell Center for Social Sciences, Cornell University

The Cornell Center for Social Sciences (CCSS), founded in 1981, anticipates and supports the evolving computational and data needs of Cornell social scientists and economists throughout the entire research process and data lifecycle. CCSS is home to one of the oldest university-based social science data archives in the United States, which contains an extensive collection of public and restricted numeric data files in the social sciences with particular emphasis on demography, economics and labor, political and social behavior, family life, and health.

CCSS also offers a Data Curation and Reproduction of Results Service, R-squared or R2, through which researchers with papers ready to submit for publication can send their data and code to CCSS before submission for appraisal, curation, and replication. The goal is to ensure that published results are replicable and that data and code are well documented, reusable, packaged, and preserved in a trustworthy data repository for access by current and future generations of researchers.

Odum Institute for Research in Social Science, University of North Carolina at Chapel Hill

The Odum Institute for Research in Social Science at the University of North Carolina at Chapel Hill provides education and support for research planning, implementation, and dissemination. The Odum Institute hosts the UNC Dataverse, which provides open access to curated collections of social science research datasets, while also serving as a repository platform for researchers to preserve, share, and publish their data.

While the Odum Institute Data Archive has been curating research data to support discovery, access, and reuse since 1969, the archive has recently expanded its service model to include comprehensive data quality review. The Odum Institute provides this data review service to journals that wish to add a verification component to their data sharing policies. The Odum Institute model of curating data for reproducibility as a cost-based service to journals exemplifies a convergence of stakeholders around the principles of research transparency and reproducibility.

Discussion: CuRe Implementers

Visit the website for an organization below or any other organization that has implemented data curation for reproducibility services and/or workflows. Based on the information presented on the website, discuss the following:

- Overall mission of the organization

- How the service supports that mission

- Who within the organization provides the service

- The audience to whom the service is targeted

American Economic Association, Data Editor

https://aeadataeditor.github.io/aea-de-guidance/

Certification Agency for Scientific Code and Data (cascad)

https://www.cascad.tech/

CODECHECK, University of Twente

https://www.itc.nl/research/open-science/codecheck/

Cornell Center for Social Sciences: Results Reproduction (R-squared) Service

https://socialsciences.cornell.edu/research-support/R-squared

Institution for Social and Policy Studies, Yale University

https://isps.yale.edu/research/data

Validation by The Science Exchange

http://validation.scienceexchange.com/#/home

Smathers Libraries, University of Florida

https://arcs.uflib.ufl.edu/services/reproducibility/

Talking to others about the importance and benefits of curating for reproducibility can be intimidating, especially when you are put on the spot or asked to speak about it for the first time. Taking time to think through what services look like, or could look like, at your institution can go a long way toward articulating your ideas effectively. Be strategic, have fun, and meet others where they are to get them as excited about reproducibility as you are.

Exercise: Elevator Pitch

Using what you have learned about reproducibility, its importance, and how the LIS profession is well situated to support researchers with curating for reproducibility, spend 5-10 minutes drafting an elevator pitch to a colleague, supervisor, or dean about piloting a service. What will you want to get across with just a few minutes of their time? As you draft your pitch, consider the following:

- How does the service fit into your organization’s strategic plan?

- What value will it bring to the organization?

- Who are the stakeholders providing the service as well as receiving the service?

After you draft your pitch, practice saying it out loud a few times. The next time you have a chance to advocate for reproducibility, you will be ready!

Key Points

Data savvy librarians and other information professionals play an important role in supporting and promoting scientific reproducibility.

While LIS professionals already engage in many practices that support reproducibility, they may need to skill up to perform some critical curation for reproducibility tasks.

There are various models for implementing data curation for reproducibility services. It is important to think about what a service might look like at your organization so that you can articulate your ideas effectively when given the opportunity.