The Data Quality Review Framework

Overview

Teaching: 0 min

Exercises: 0 min

Questions

What is data quality?

What is the relationship between curation and quality?

What is the Data Quality Review (DQR) Framework?

How does the DQR support scientific reproducibility?

Objectives

Explain the relationship between curation and quality

This episode continues the exploration of reproducibility standards taught in Lesson 1: Introduction to Curating for Reproducibility, with a focus on quality criteria and the curation activities that can help ensure research compendia meet those criteria. Some of these curation activities may be familiar to those with data management and curation experience. However, treating reproducibility as the primary goal of curation offers a new perspective on the importance of every task in the curation workflow, no matter how trivial it may seem. For unfamiliar curation activities, seeing how they fit into the context of reproducibility helps explain why it is worth the extra effort to acquire the knowledge and skills needed to perform them.

What is Data Quality?

Collecting data for scientific research often requires a great investment. It is essential that the data are of high quality so that future users can trust and use them. But what does it mean for data to be of “high quality”?

Quality data might be conceived of as accurate, complete, or timely. Judgments about the quality of data are often tied to specific goals, such as authenticity, openness, transparency, and trust. Different stakeholders may prioritize different aspects of data quality, and these aspects may conflict with each other. For example, in the rush to produce and disseminate data quickly enough to meet timeliness requirements, accuracy may be compromised because there is not enough time to inspect the data thoroughly for errors.

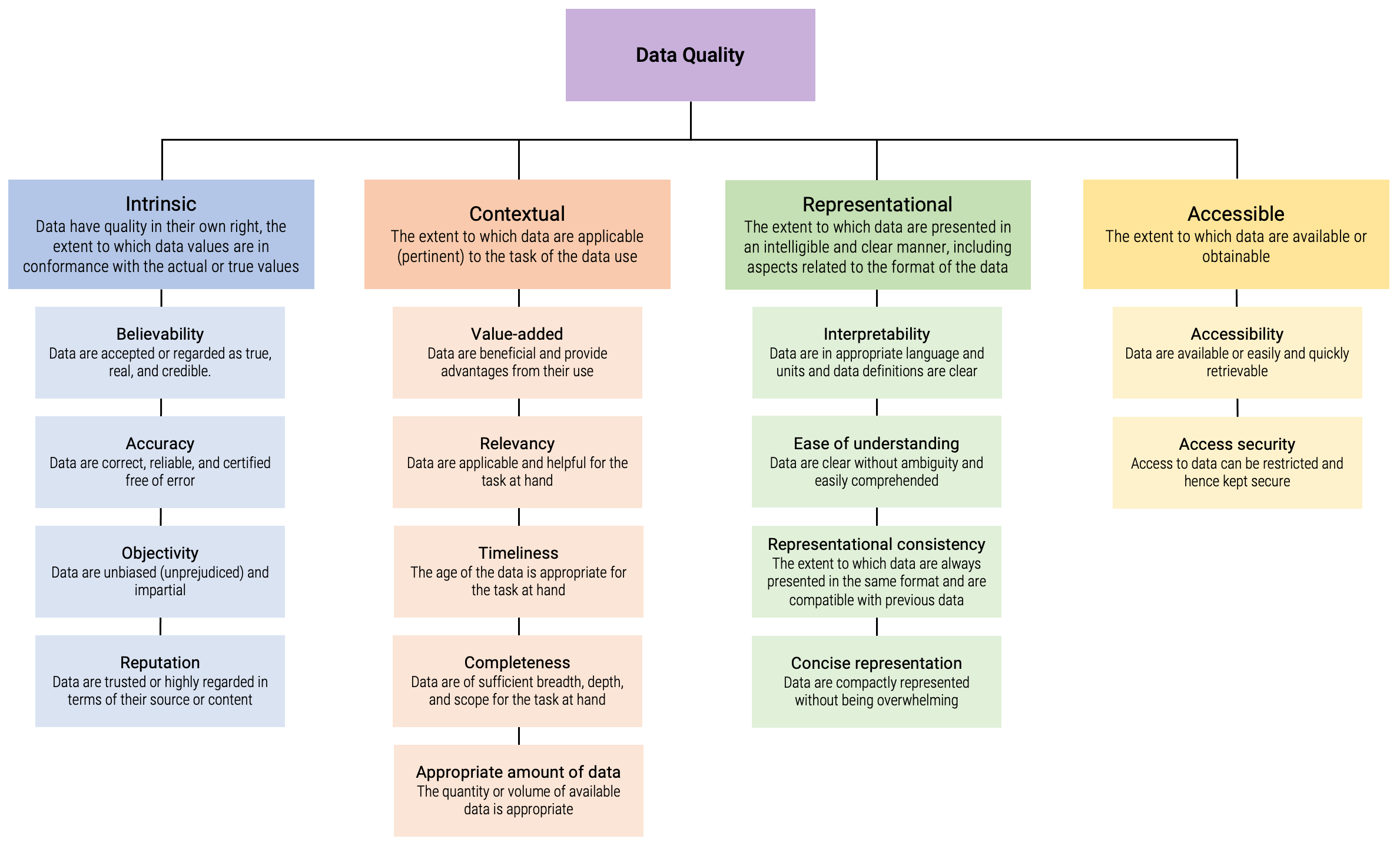

Wang and Strong (1996) defined data quality as having several dimensions, based on surveys of data users who were asked to select from a list of terms those that they perceived to be attributes of data quality. A follow-up survey then asked data users to rank those attributes in order of importance. The result was a list of 15 data quality attributes grouped into four primary categories.

Assessing quality can seem abstract when approached as a list or as a hypothetical exercise. The challenges below are designed to deepen our understanding of data quality by spending some time with the data quality dimensions. They rely on the data quality categories defined by Wang and Strong (1996): Intrinsic, Contextual, Representational, and Accessible.

Exercise: Assessing Quality: Part 1

This group task is an opportunity for you to think about the different dimensions of data quality and the skills that may be required to assess quality. Instructor note: Consider using a whiteboard or an online tool such as Google Jamboard, Etherpad, or similar for this group activity.

- Start by getting into pairs and reviewing the quality dimensions.

- Group each of the data quality dimensions into 4 categories: Intrinsic, Contextual, Representational, and Accessible. In case of conflict, choose the best-fitting category for the dimension. All dimensions must be categorized.

- Your instructor will lead a discussion to establish that we all understand there are different categories of quality, that we are not always talking about the same thing when we talk about data quality, and that some aspects of quality conflict with others.

- If the groups had difficulty grouping the dimensions, discuss why it was so difficult.

Solution

Wang and Strong (1996) group the data quality dimensions into four categories:

- INTRINSIC (believability; accuracy; objectivity; reputation) – Denotes that data have quality in their own right, the extent to which data values are in conformance with the actual or true values;

- CONTEXTUAL (relevancy; value-added; timeliness; completeness; appropriate amount) – The extent to which data are applicable (pertinent) to the task of the data user;

- REPRESENTATIONAL (interpretability; ease of understanding; representational consistency; concise representation) – The extent to which data are presented in an intelligible and clear manner, including aspects related to the format of the data (e.g., concise and consistent representation) and meaning of data (interpretability and ease of understanding); and

- ACCESSIBLE (accessibility; access security) – The extent to which data are available or obtainable.

The range of skills needed to do all this work, with its many layers, can be daunting. Knowing what we don’t know can be incredibly liberating. Seeking out collaborators when the required skills are not available in your department can help build important relationships and share the burden. Take some time to think about what skills this work will require and whom you can collaborate with on your campus.

Exercise: Assessing Quality: Part 2

This group task is an opportunity for you to think about the different dimensions of data quality and the skills that may be required to assess quality.

- Get into groups of 4-6.

- Discuss the skills that might be necessary to assess particular dimensions and categories of data quality from the Assessing Quality: Part 1 challenge. What are the skills? Do you have them? Who in your organization does? Are there any tools you might use to help you? Are there other departments on campus you could collaborate with on this?

- The groups report back.

- The instructor will collate reports on a whiteboard and facilitate a discussion about: a) how understanding data quality is a good first step for what we will be learning, b) what we will be learning in this lesson, and c) how what we learn will help us solve some of the problems we are facing.

Solution

- Example skills include, but are not limited to: statistical analysis skills (using statistical software to generate descriptive statistics, or more complex analyses if needed to vet submitted data), and the ability to identify both direct and indirect PII/sensitive data (and knowledge of best practices for redacting or transforming such data).

- Example departments include, but are not limited to: Statistics and various research support departments.

The Data Quality Review (DQR) Framework

In the context of curation, it is useful to think about data quality not so much as attributes of the data but as a “set of measures that determine if data are independently understandable for informed reuse” (Peer et al., 2014).

A data quality review (DQR) is a framework for organizing curation activities in the context of reproducible research. The review activities included in the DQR represent the recommended curation activities that enable a particular reuse of digital materials: the reproduction of reported results using the original materials.

The DQR framework focuses on the digital objects that comprise computation-based scientific claims and, importantly, on their interaction. Unlike data curation, which focuses on the dataset as a digital object, DQR is applied to the computational process that generates the scientific claims and to that process's component parts.

The DQR combines gold-standard data curation practices and independent reproduction of computation that underlies reported scientific claims.

Spotlight: The DQR and Other Curation Frameworks

The Data Quality Review framework specifically targets computational reproducibility, but it can be used alongside other frameworks, checklists, and tools that support curation, such as the CURATED framework developed by the Data Curation Network (DCN).

The DQR in Practice

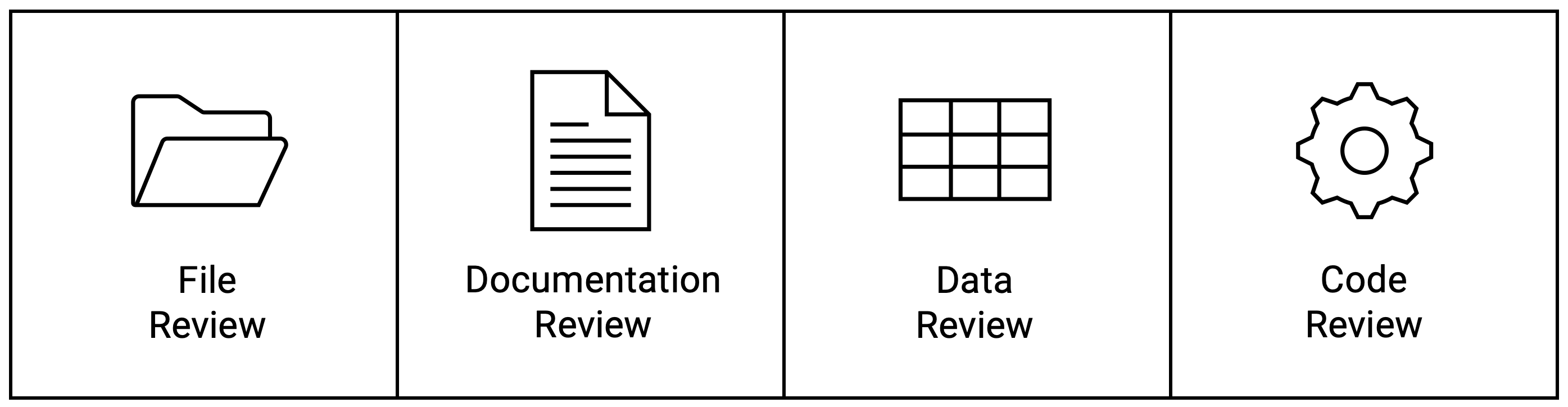

The DQR is a process whereby data and associated files are assessed and required actions are taken to ensure files are independently understandable and reusable. This is an active process, involving a review of the files, the documentation, the data, and the code.

The DQR involves a review of the files in the compendium, of the documentation that accompanies the files, and an in-depth review of the data and the code (see the separate episodes in this lesson for each). These review categories and their associated tasks do not necessarily happen in a linear order.

Spotlight: Committing to Data Quality Review

An article published in the International Journal of Digital Curation in 2014 explains what a data quality review entails, who is positioned to carry out such a review, and what it means to commit to such a review. The article examines the review practices of three domain-specific data archives and three general data repositories, finding that general data repositories fall short of full data quality review and that long-standing data archives, particularly in the social sciences, implement many review activities, but typically not code review.

The article argues that commitment to data quality review is a community effort, involving researchers, academic libraries, scholarly journals, and others: “the stakeholders and caretakers of scientific materials, such as data and code, must share the responsibility of meeting the challenges of data quality review in order to ensure that data, documentation, and code are of the highest quality so as to be independently understandable for informed reuse, in the long term. The commitment to data quality review, however, has to involve the entire research community.”

Peer, L., Green, A., & Stephenson, E. (2014). Committing to data quality review. International Journal of Digital Curation, 9(1). http://doi.org/10.2218/ijdc.v9i1.317

Discussion: Food for Thought About Review

Roger Peng, an advocate of reproducible research, argues that articles that have passed the reproducibility review ‘convey the idea that a knowledgeable individual has reviewed the code and data and was capable of producing the results claimed by the author. In cases in which questionable results are obtained, reproducibility is critical to tracking down the “bugs” of computational science.’

Peng, R.D. (2011). Reproducible research in computational science. Science, 334 (6060), 1226-1227. https://doi.org/10.1126/science.1213847

Questions for discussion:

- Is this something that researchers want?

- What happens when you find a mistake?

- Who is in the best position to do such a review?

- How does the labor of review figure into the research process as a whole, and authorship in particular?

- How do disciplinary norms play into this?

The Object of Review: The Research Compendium

When we talk about computational reproducibility, we necessarily include more than one file. At minimum, we need a computer script and input data. Let’s recall what our goal is here: to find out whether data and code are available and sufficient to produce the same results, and whether the programs run. But we also want to ensure that files are independently understandable for informed reuse.

The basic components of a reproducibility package are:

- Data

- Code

- Documentation (e.g., README, codebook)

Other materials that may be included:

- Code output matrix

- Comparison output

- Treatment materials

Spotlight: The Whole is Greater Than the Sum of Its Parts

Do we care about the package as a whole or about its constituent parts? That depends on what you want to do with it. Some users may want to use or examine only one component of the reproducibility package, the data, for example. Others prefer a frictionless process whereby they can test the package as a whole, for example, via ‘Push-Button Replication’ (see this project from 3ie: https://www.3ieimpact.org/our-expertise/replication/push-button-replication).

The Process of Review

The review process is often iterative rather than linear. For example, data review may require running a script to apply labels. Inspecting the code can reveal necessary files that you missed in the file review. Code may generate a codebook, which changes what you check during documentation review. And during code review, comparing the output to the manuscript may require you to revise conclusions reached earlier during code inspection.

As a curator, you need to be able to work iteratively and to track your process. You will likely also need to interact with authors to resolve any outstanding issues.

Spotlight: Curation is Not a Linear Process

It is important to note that curation work in general, and curation frameworks in particular, do not necessarily prescribe a strict workflow or sequence. Procedures may vary depending on the materials (e.g., the data), on curator skills, on policy, on data creator feedback, and more. The key is that all curation activities should be performed before a review is complete.

Keeping Track of the Review and Communication with the Researcher

There will be some communication with authors about missing information. Organizations that take on DQR should think through some key issues such as:

- What mechanisms will you use to keep track of where you are in the review and of any problems you find before accepting the submission?

- How will you set standards for what to send back to researchers and what to accept?

- How will you handle communication with researchers, including delayed responses?
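One way to answer the first question is a simple structured log. The sketch below is a hypothetical Python example, not a feature of any particular tool: `ReviewLog`, its status values, and the 14-day follow-up threshold are all assumptions. It records the status of the file, documentation, data, and code review, keeps a list of issues sent to authors, and flags queries that have gone unanswered for too long.

```python
from dataclasses import dataclass, field

REVIEW_CATEGORIES = ("file", "documentation", "data", "code")


@dataclass
class ReviewLog:
    """Hypothetical minimal tracker for one study's data quality review."""

    mnemonic: str
    # Status per DQR review category, e.g. "not started", "in progress", "done"
    status: dict = field(
        default_factory=lambda: {c: "not started" for c in REVIEW_CATEGORIES}
    )
    issues: list = field(default_factory=list)

    def set_status(self, category, value):
        if category not in self.status:
            raise KeyError(f"unknown review category: {category}")
        self.status[category] = value

    def add_issue(self, category, description, sent_to_author=None):
        # sent_to_author: datetime.date the query went to the researcher, if any
        self.issues.append(
            {"category": category, "description": description,
             "sent": sent_to_author, "resolved": False}
        )

    def open_issues(self):
        return [i for i in self.issues if not i["resolved"]]

    def overdue(self, today, days=14):
        """Open issues sent to the author more than `days` days before `today`."""
        return [
            i for i in self.open_issues()
            if i["sent"] is not None and (today - i["sent"]).days > days
        ]

    def complete(self):
        """Complete only when every category is done and no issue is still open."""
        return all(v == "done" for v in self.status.values()) and not self.open_issues()
```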

Spotlight: Curation Process Management

The curation workflow has many steps and can involve several stakeholders. Managing the curation workflow and keeping track of curation activities can quickly become challenging. A few recent approaches attempt to address these challenges, and they can also help standardize the process. Here are some examples:

Checklist: The CURATE(D) steps are a teaching and representation tool. This model is useful for onboarding data curators and orienting researchers preparing to share their data. It provides a standardized set of C-U-R-A-T-E-D steps and checklists to ensure that all datasets submitted to the Network receive consistent treatment (DCN, 2018).

Data Curation Network (2022). CURATE(D) Steps and Checklist for Data Curation, version 2. http://z.umn.edu/curate.

Software: The Yale Application for Research Data (YARD) is an adaptable curation workflow tool that enhances research outputs and associated digital artifacts designated for archival and reuse. By tracking curation tasks, YARD supports a transparent and documented workflow that can help researchers, curators, and publishers share responsibility for curation activities through a single pipeline.

Peer, L., & Dull, J. (2020). YARD: A Tool for Curating Research Outputs. Data Science Journal, 19(1), 28. http://doi.org/10.5334/dsj-2020-028

The remaining episodes in this lesson outline the recommended curation activities for performing file, documentation, data, and code review.

Key Points

It is essential that the data are of high quality so future users can trust and use the data.

There are many different dimensions of data quality, and different stakeholders may prioritize different ones.

Reproducibility, the ability to reproduce the original analysis and results, sets an even higher bar for quality.

The Data Quality Review (DQR) framework is a set of recommended curation activities that promote the reproducibility of the research.

DQR involves a review of the files, the documentation, the data, and the code being curated.

File Review

Overview

Teaching: 0 min

Exercises: 0 min

Questions

Why is file review important for reproducibility?

What curation activities are associated with file review?

Objectives

Understand why it is important to review deposited files

Recognize file review best practices

In this episode, we unpack the Data Quality Review Framework, focusing on File Review. Other episodes in this lesson elaborate on Documentation, Data, and Code Review.

File Review in Practice

File review is an important part of the Data Quality Review (DQR) covered in the previous episode. This episode will outline best practices to ensure the integrity of files in the reproducibility package.

Data reuse relies on clearly identified, functional, and long-term accessible files. Tasks associated with file review include inspecting the files, identifying file format-specific curation tasks, creating persistent identifiers and metadata, and creating preservation-format copies of files. Preservation-oriented steps, such as implementing a migration strategy for file formats and ongoing bit monitoring, are also part of file review.

File review is especially important as a preparation for packaging the files for deposit into a trustworthy repository for long-term access and sharing (see Lesson 4: Compendium Packaging).

File Inspection

Files must be inspected to ensure that they are all present and correctly named, and that they open. File sizes and formats may be recorded and checksums created.

File inspection tasks may include the following:

- Review the list of files to verify that they are all present

- Check files for viruses using anti-virus software

- Open each file to verify that it opens as expected and is not corrupt or inoperable

- Verify that the content of each file matches the expected format

- Ensure that file names conform to any applicable naming convention, and that they are internally consistent if one file references another by name

- Identify any missing metadata that the depositor had been expected to submit
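Several of these checks can be scripted. The sketch below, a hypothetical `inspect_files` helper in Python, assumes the depositor's file list has been transcribed into a list of names and that all files sit in a single folder (subfolders are not scanned). It reports listed files that are missing, files that were not listed, empty files, and each file's size in bytes. It does not replace opening each file or running a virus scan.

```python
from pathlib import Path


def inspect_files(package_dir, expected_names):
    """Compare a package folder against the depositor's file list."""
    root = Path(package_dir)
    found = {p.name: p for p in root.iterdir() if p.is_file()}
    found_names = set(found)
    expected = set(expected_names)
    return {
        # Listed by the depositor but not present in the folder
        "missing": sorted(expected - found_names),
        # Present in the folder but not on the depositor's list
        "unlisted": sorted(found_names - expected),
        # Empty files often signal a failed export or upload
        "zero_byte": sorted(n for n, p in found.items() if p.stat().st_size == 0),
        # Sizes in bytes, for the file-level metadata
        "sizes": {n: found[n].stat().st_size for n in sorted(found)},
    }
```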

File Format-specific Curation Tasks

Some aspects of file review may vary based on the file format or subject domain. The Data Curation Network Primers have helpful curation process recommendations for particular file formats.

Spotlight: Data Repository Metadata

Data curation involves the creation of metadata at both the file level and the study level to facilitate further data quality review and discoverability. Required metadata fields vary by repository, but should be mapped to standard metadata schemas for preservation and interoperability.

Metadata librarians or other metadata experts on staff may create a Metadata Application Profile for the data repository, which data curators consult to determine what metadata to create for each deposit they curate.

Here are some resources for identifying metadata fields to include:

Verify and Enhance File-Level Metadata

Verify and enhance metadata associated with each individual file. Metadata-related tasks may include:

- Verify any file-level metadata provided by the depositor

- Create or enhance any necessary file-level metadata, such as:

- File format or resource type

- File size

- Author/Creator/Source

- Rights

- Persistent Identifier

- Checksum

- Last verified date
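Some of these fields can be generated automatically. The sketch below is a hypothetical Python helper, `file_level_metadata`, that fills in format, size, checksum, and last verified date for one file, and leaves creator, rights, and persistent identifier to be supplied by the curator or repository. The field names are illustrative assumptions; map them to your repository's metadata schema. The format is guessed from the file extension with Python's `mimetypes` module, so a file whose extension does not match its contents will not be caught.

```python
import hashlib
import mimetypes
from datetime import date
from pathlib import Path


def file_level_metadata(path, creator=None, rights=None, identifier=None):
    """Build an illustrative file-level metadata record for one file."""
    p = Path(path)
    mime, _ = mimetypes.guess_type(p.name)
    return {
        "filename": p.name,
        # Guessed from the extension only; the file contents are not inspected
        "format": mime or "application/octet-stream",
        "size_bytes": p.stat().st_size,
        "creator": creator,
        "rights": rights,
        # Stays None until the repository assigns a persistent identifier
        "identifier": identifier,
        # Reads the whole file into memory, which is fine for small files
        "checksum_sha256": hashlib.sha256(p.read_bytes()).hexdigest(),
        "last_verified": date.today().isoformat(),
    }
```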

Spotlight: Fixity in Digital Preservation

Fixity, in the preservation sense, means the assurance that a digital file has remained unchanged, i.e., fixed. Fixity helps establish trust between data producers, stewards (e.g., repositories and archives), and users.

Fixity checking is the process of verifying that a digital object has not been altered or corrupted. A checksum on a file is a ‘digital fingerprint’ used to detect if the contents of a file have changed. Checksums can be generated using a range of readily available and open source tools.
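A minimal fixity workflow records a checksum for every file when the package is received, then recomputes the checksums later and compares. The Python sketch below uses SHA-256 from the standard `hashlib` module. The algorithm choice, the manifest name `manifest-sha256.txt`, and the function names are assumptions. Each manifest line holds a digest, two spaces, and a file name, which is the same layout that GNU `sha256sum` writes.

```python
import hashlib
from pathlib import Path

MANIFEST = "manifest-sha256.txt"  # assumed manifest file name


def sha256_file(path, chunk_size=65536):
    """Compute a file's SHA-256 'digital fingerprint', reading it in chunks."""
    h = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def write_manifest(folder):
    """Record a checksum for every file in `folder`, excluding the manifest itself."""
    root = Path(folder)
    lines = [
        f"{sha256_file(p)}  {p.name}"
        for p in sorted(root.iterdir())
        if p.is_file() and p.name != MANIFEST
    ]
    (root / MANIFEST).write_text("\n".join(lines) + "\n", encoding="utf-8")


def verify_manifest(folder):
    """Return (file name, problem) pairs; an empty list means fixity holds."""
    root = Path(folder)
    problems = []
    for line in (root / MANIFEST).read_text(encoding="utf-8").splitlines():
        if not line.strip():
            continue  # skip blank lines
        expected, name = line.split("  ", 1)
        target = root / name
        if not target.is_file():
            problems.append((name, "missing"))
        elif sha256_file(target) != expected:
            problems.append((name, "changed"))
    return problems
```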

See more information here:

Code File Metadata

Curators may also want to enhance the metadata for code files, which includes some code-specific fields, for example:

- Date the code was last updated

- Date the code was last executed

- Code citation with DOI

- Code license

- Character encoding

- Software, version, and operating system information
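Two of these fields can be checked directly from the file. The hypothetical Python helper below tests whether a script decodes as UTF-8 (noting a byte-order mark if present), and reads the file system modification time as a candidate for the last-updated date. Both are heuristics. A file that fails the check may be in another encoding, such as Latin-1, and copying or unzipping files can reset modification times, so confirm these values with the researcher. The date the code was last executed cannot be read from the file and must come from the authors or a log.

```python
from datetime import datetime, timezone
from pathlib import Path


def code_file_facts(path):
    """Collect encoding and modification-date hints for a code file."""
    p = Path(path)
    raw = p.read_bytes()
    try:
        raw.decode("utf-8")
        encoding = "UTF-8 (with BOM)" if raw.startswith(b"\xef\xbb\xbf") else "UTF-8"
    except UnicodeDecodeError:
        # Only says the file is not valid UTF-8; it does not name the real encoding
        encoding = "not UTF-8: confirm with the author"
    # File system modification time, which copying or unzipping may reset
    modified = datetime.fromtimestamp(p.stat().st_mtime, tz=timezone.utc)
    return {
        "filename": p.name,
        "encoding": encoding,
        "last_modified_utc": modified.date().isoformat(),
    }
```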

Spotlight: Software Metadata

If the research compendium includes software, curators may add or enhance associated metadata. The Software Metadata Recommended Formats Guide (SMRF) summarizes and defines the metadata elements recommended by the Software Preservation Network to describe software materials in the context of a wide range of collections.

Christophersen, A., Colón-Marrero, E., Dietrich, D., Falcao, P., Fox, C., Hanson, K., Kwan, A., & McEniry, M. (2022, February 8). Software Metadata Recommended Formats Guide. Software Preservation Network. https://www.softwarepreservationnetwork.org/smrf-guide/

Transform or Migrate to Preservation Formats

Files that are not already in a preservation format (such as .csv, .txt, or .pdf), and for which no built-in converter is available, should be converted to a preservation format manually. Transforming files into non-proprietary formats facilitates potential reuse and ensures the files remain accessible in the long term. For files from proprietary statistical software (e.g., .dta, .sav, .do, and others), both a converted preservation-format file and the original file may be included in the reproducibility package.
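It can help to tabulate which files need a preservation copy before doing any conversion. The Python sketch below uses the mapping suggested in the File Review Challenge solution later in this episode (.xlsx, .docx, .dta, and .do). The table and the hypothetical `conversion_plan` function are illustrations, to be replaced by your repository's own format policy. The sketch only plans conversions and does not perform them, and it assumes the original file is kept alongside the proposed preservation copy.

```python
from pathlib import Path

# Illustrative mapping from the File Review Challenge solution; replace it
# with your repository's own preservation format policy.
PRESERVATION_TARGETS = {".xlsx": ".csv", ".docx": ".txt", ".dta": ".csv", ".do": ".txt"}
PRESERVATION_FORMATS = {".csv", ".txt", ".pdf"}


def conversion_plan(filenames):
    """Map each file that needs a preservation copy to the suggested copy's name.

    The original file is kept; the plan only proposes an additional copy.
    """
    plan = {}
    for name in filenames:
        suffix = Path(name).suffix.lower()
        if suffix in PRESERVATION_FORMATS:
            continue  # already in a preservation format
        target = PRESERVATION_TARGETS.get(suffix)
        if target is None:
            plan[name] = "unknown format: review manually"
        else:
            plan[name] = Path(name).with_suffix(target).name
    return plan
```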

Spotlight: Preservation Formats

Some resources for identifying recommended preservation formats:

- Library of Congress Recommended Formats

- Cornell eCommons’ recommended file formats and probability for long-term preservation matrix

- OpenAIRE Data formats for preservation

- National Archives Tables of File Formats

- UK Data Service Recommended formats

- UK National Archives

Verify and Enhance Study-Level Metadata

Verify and enhance metadata to be applied at the level of the study. Metadata-related tasks may include:

- Verify any study-level metadata provided by the depositor

- Create or enhance any necessary study-level metadata, such as:

- Creator, creator affiliation, ORCID or researcher unique identifier

- Title

- Description

- Language

- Publication Date

- Rights & License

- Temporal coverage, spatial coverage

- Funding Source

- Citations to related work

- Create a study-level citation

- Create the catalog record and assign it a persistent identifier
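A study-level citation can be assembled from the metadata fields above. The Python sketch below uses an APA-like dataset pattern (creators, year, title, “[Data set]”, publisher, DOI). The pattern, the function name `study_citation`, and the example values are assumptions, and many repositories prescribe their own citation format.

```python
def study_citation(creators, year, title, publisher, doi):
    """Format a study-level citation in an APA-like dataset style (an assumption).

    creators: names already formatted as "Family, I." strings.
    """
    if len(creators) == 1:
        names = creators[0]
    else:
        names = ", ".join(creators[:-1]) + ", & " + creators[-1]
    return f"{names} ({year}). {title} [Data set]. {publisher}. https://doi.org/{doi}"


# Hypothetical values; the DOI is a placeholder, not a real identifier.
print(study_citation(["Kim, H. B."], 2018, "Reproduction materials",
                     "Example Repository", "10.1234/example"))
```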

Study Mnemonic

Following good data management practice, researchers would have created a project folder for the study that contains all the files used in the study. The folder name, or study mnemonic, should be unique so that it identifies this particular set of files. Reviewers can also use the study mnemonic to tag communications with authors and as a codebook identifier in archives.

For example, CISER’s Results Reproduction Service team prepared this reproducibility package using the study mnemonic “R2-2019-MEEMKEN-1” as the codebook identifier and as an e-mail subject tag to track communications with authors: https://archive.ciser.cornell.edu/reproduction-packages/2828.

Reviewing the quality of deposited research files can seem abstract when considered hypothetically. The exercise below is designed to deepen our understanding of file review by working with example files. It asks you to identify problems with the files and suggest resolutions. The Solution copy of the example files shows ways of resolving the issues.
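With a mnemonic in place, naming checks and communication tags are easy to apply consistently. The Python sketch below assumes the convention used in the File Review Challenge, where every file name starts with the mnemonic followed by a hyphen. The functions `check_naming` and `tag_subject` and the bracketed subject format are hypothetical.

```python
def check_naming(filenames, mnemonic):
    """Return the file names that do not start with '<mnemonic>-'."""
    prefix = f"{mnemonic}-"
    return [name for name in filenames if not name.startswith(prefix)]


def tag_subject(mnemonic, subject):
    """Prefix an e-mail subject with the study mnemonic so threads can be filtered."""
    return f"[{mnemonic}] {subject}"
```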

Exercise: File Review Challenge

Review the example files for inclusion in a research compendium. Make recommendations to resolve any issues. In real life, you would communicate with the researchers to resolve any missing files or problematic issues you found.

- The depositors submitted a file list document: 2018-Kim-Documentation.docx. Are all files present?

- The study mnemonic “2018-Kim” will be used for this example. Do the files follow the appropriate naming convention?

- Do all the files open? If not, what is the problem?

- Record file sizes. Does anything look off?

- What study-level metadata could be suggested based on these files?

- Should any files be converted to a different file format for preservation?

Solution

Are all files present?

- The file 2018-Kim-anonymized_participants.xlsx, listed in 2018-Kim-Documentation.docx, is missing.

Do files follow the appropriate naming convention?

- One file, “analysis_final.do”, does not follow the convention because it lacks the “2018-Kim” prefix.

Do all the files open?

- One file, 2018-Kim-Acknowledgements.xlzx, has a typo in the file extension (.xlzx instead of .xlsx) that prevents it from opening.

- One file is a .dta file, which requires Stata

- The .do file is run in Stata, but can be viewed in any text editor

Record file sizes

- One file, 2018-Kim-questionnaire.txt, is 0 KB (empty).

Suggest study-level metadata

- dc.creator = Kim, Hyuncheol Bryant

- dc.title = Reproduction Materials for: The Role of Education Interventions in Improving Economic Rationality

- dc.language = english

- dc.language.iso = eng

- dc.creator.orcid = 0000-0001-5304-0274

- dc.relation.isreferencedby = Choi, Syngjoo, Kim, Hyuncheol Bryant, Kim, Booyuel, et al. “The role of education interventions in improving economic rationality.” Science. Volume 362, Issue 6410 (2018-10-05): 83-86. https://doi.org/10.1126/science.aar6987.

Recommend a preservation format for each file

- .xlsx → .csv

- .docx → .txt

- .dta → .csv

- .do → .txt

- For proprietary file formats, it is best practice to include both a preservation-format version and the original proprietary version of the file in the reproducibility package; for example, include both the submitted .dta file and a .csv version.

Key Points

Files must be inspected.

Study and file metadata should be detailed, accurate, and formatted in a standard schema.

Aspects of file review may vary based on file format.

Transforming files into non-proprietary formats facilitates reuse and ensures long-term accessibility.

Documentation Review

Overview

Teaching: 0 min

Exercises: 0 min

Questions

Why is documentation important for reproducibility?

What types of documentation are necessary to support reproducibility?

What curation activities are associated with documentation review?

Objectives

Understand why it is important to review deposited documentation, and create additional documentation.

Recognize documentation and documentation review best practices.

In this episode, we unpack the Data Quality Review Framework, focusing on Documentation Review. Other episodes in this lesson elaborate on File, Data, and Code Review.

Documentation Review in Practice

Documentation supporting the use of data must be comprehensive enough to enable others to explore the resource fully, and detailed enough to allow someone who has not been involved in the data creation process to understand how the data were collected (Digital Preservation Coalition, 2008, p. 25).

This review step includes a review of the contextual documentation about the files, an assessment of whether the descriptive information about the files and about methods and sampling is comprehensive and sufficient for independent informed reuse, and the application of corrective actions where this information is missing, including creating documentation compliant with community standards and linking all other known related research products (e.g., publications, registries, grants) (Peer et al., 2014).

Documentation Requirements

In addition to metadata, covered in Episode 2: File Review, robust stand-alone documentation is needed. This includes a README file, a codebook or data dictionary, and other contextual documentation.

Documentation for Reproducibility

“Documentation for data analysis workflows can come in many forms, including comments describing individual lines of code, README files orienting a reader within a code repository, descriptive commit history logs tracking the progress of code development, docstrings detailing function capabilities, and vignettes providing example applications. Documentation provides both a user manual for particular tools within a project (for example, data cleaning functions), and a reference log describing scientific research decisions and their rationale (for example, the reasons behind specific parameter choices).”

Stoudt, S., Vásquez, V. N., & Martinez, C. C. (2021). Principles for data analysis workflows. PLoS Computational Biology, 17(3), e1008770. https://doi.org/10.1371/journal.pcbi.1008770

README File

Clear instructions are one of the key elements needed to reproduce a study successfully. A README file fulfills the author’s responsibility to include key information about the study (see this README template).

Reviewing a README file involves verifying that key information is included and suggesting remediation where it is not. For reproducibility, a README file should also provide context for the computation. This covers the following identifying information about the study and the files.

- GENERAL INFORMATION

- Title of the study

- Authors and institutional affiliation including ORCID

- Corresponding author contact information (e-mail, address, phone number)

- Date published or finalized for release

- Publisher, issue, and DOI (if published)

- Date of data collection

- Geographic location

- Information about funding sources that supported the collection of data

- Overview of the data (similar to an abstract but about the dataset)

- SHARING/ACCESS INFORMATION

- Licenses/restrictions on the data. This information, also referred to as a Data Availability Statement or Data Access Statement, signposts where the data associated with a paper are available and under what conditions they can be accessed, including links (where applicable) to the dataset.

- Links to publications that cite or use the data (current manuscript as well as previous if any)

- Source, if the data were derived from another source

- Terms of use

- DATA & FILE OVERVIEW

- File list

- Filename (for each file in dataset)

- Short description (for each file in the dataset - what is this file)

- Relationship between all the files (how they work together)


- METHODOLOGICAL INFORMATION

- Description of methods used for collection/generation of the data

- Methods for processing the data (how the submitted data were generated from the raw or collected data)

- Instrument- or software-specific information needed to interpret the data (include the software version and year, and any additional libraries and packages required to run the analysis). If the code takes more than a few minutes to run, consider adding an estimated run time (e.g., “Code takes 37 minutes to run”) and the date it was last run successfully. Over time, some commands may stop working because of updates to the software or installed packages. It is therefore very important to document the computing environment at the time the researchers did their analysis, so that future users have the information needed to rebuild the environment should the program files no longer work.

- Description of any quality-assurance procedures performed on the data.

- DATA-SPECIFIC INFORMATION FOR: [FILENAME] (create a section for each file in the dataset)

- Number of variables

- Number of cases/rows:

- Missing data codes/definitions

- Variable list

- Variable name (for each variable):

- Description (description of the variable) (value labels if appropriate)

- COMPUTATIONAL ENVIRONMENT INFORMATION

- Specify in detail the computing environment you have used to run your analysis:

- Operating system and version (e.g., Windows 10, Ubuntu 18.04).

- Number of CPUs/Cores.

- Size of memory.

- Statistical software package(s) used in the analysis and their version.

- Packages, ado files, or libraries used in the analysis and their version.

- Specify the file encoding if necessary. Example: UTF-8.

- Estimate the time to complete processing (from beginning to end) using this computing environment.


Remember to keep the information and instructions succinct.
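When the analysis is run in Python, much of the computational environment information can be collected programmatically. The sketch below is a hypothetical helper built on the standard `platform`, `os`, and `importlib.metadata` modules. Other statistical packages have their own ways of reporting versions. Memory size is not exposed portably by the Python standard library, so the sketch leaves it to be filled in by hand.

```python
import os
import platform
from importlib import metadata


def environment_report(packages=()):
    """Collect computing-environment details to copy into a README."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = "not installed"
    return {
        "operating_system": f"{platform.system()} {platform.release()}",
        "cpu_count": os.cpu_count(),  # may be None if it cannot be determined
        "memory": "fill in by hand",  # not available portably from the stdlib
        "software": f"Python {platform.python_version()}",
        "packages": versions,
    }


if __name__ == "__main__":
    for key, value in environment_report(["numpy", "pandas"]).items():
        print(f"{key}: {value}")
```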

Resource: README File

See this resource for comprehensive guidance on writing a README file, including a list of recommended content, formatting standards, and a README document template.

Cornell University Research Data Management Service Group. (n.d.). Guide to writing “readme” style metadata. https://data.research.cornell.edu/content/readme
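A first pass over a deposited README can check that the major sections listed above are present. The Python sketch below assumes the README uses the section headings from this episode's list. `missing_readme_sections` is hypothetical, and a heading being present says nothing about whether the section under it is complete or accurate.

```python
REQUIRED_SECTIONS = (
    "GENERAL INFORMATION",
    "SHARING/ACCESS INFORMATION",
    "DATA & FILE OVERVIEW",
    "METHODOLOGICAL INFORMATION",
    "DATA-SPECIFIC INFORMATION",
    "COMPUTATIONAL ENVIRONMENT INFORMATION",
)


def missing_readme_sections(readme_text, required=REQUIRED_SECTIONS):
    """Return required headings that do not appear anywhere in the README text.

    Case-insensitive substring match: it confirms a heading is present,
    not that the section under it is complete or accurate.
    """
    text = readme_text.upper()
    return [heading for heading in required if heading.upper() not in text]
```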

Data Availability Statement

A research compendium may not include the data, for example because of data provider restrictions. For others to find, access, and use the data to reproduce results, a Data Availability Statement should include information about where to download the files, what to name them, and where to put them. For example: “Download this

The Data Availability Statement should include the following essential elements:

- Name of Data Provider

- Title of Dataset

- Location or URL of the dataset

- License of the dataset

- Date the data were accessed

- Data citation (if available)

- Detailed instructions on how to gain access to the data (especially for restricted data)

- URL to Data Provider instructions if available

- Contact information of person with authority to grant access to data (if necessary)

- Name to assign to the data (if they have to be renamed) and where to put them in the reproducibility package, so that the code can read them properly.

- Other notes

Some examples of Data Availability Statements: Taylor and Francis, Springer Nature

Data Availability Statements can seem abstract when considered hypothetically. This exercise aims to deepen our understanding of these statements by writing brief ones for different scenarios.

Exercise: Data Availability Statement Exercise

Write a data availability statement for the following:

- Data openly available in a public repository – (link to example)

- Data subject to third-party (data provider) restrictions – link to example

Solution

Published and public access data

The data that support the findings of this study are openly available in [repository name] at http://doi.org/[doi], reference number [reference number]. Date accessed by the authors [dd-mmm-yyyy]. Download the, use the default name, and put it in the of the reproducibility package.

Published, but with third-party restrictions

The data that support the findings of this study are available [from] [third party]. Restrictions apply to the availability of these data, which were used under license for this study. Data are available [from the authors] at [URL] with the permission of [third party]. Date accessed by the authors [dd-mmm-yyyy]. Download the, use the default name <or rename it to …>, and put it in the of the reproducibility package.

Codebook/Data Dictionary

A codebook or data dictionary is critical to the interpretation of data and output files. According to the ICPSR, a codebook generically includes information on the structure, contents, and layout of a data file.

Codebooks accompanying social science data typically include the following information about each variable:

- Variable name and label. For example, “g2004: Voted in the general elections of 2004.”

- Value labels. A clear label to interpret each of the codes assigned to the variable. For example, “g2004: 1=yes, 0=no.”

- The exact question wording or the exact meaning of the datum. Sources should be cited for questions drawn from previous surveys or published work. For example, “q2: political leaning (exact Q wording: “Do you lean more toward the Democratic or Republican party?” source: ANES)”

- Location in the data file. Ordinarily, the order of variables in the documentation will be the same as in the file; if not, the position of the variable within the file should be indicated.

- Missing data codes. Codes assigned to represent data that are missing. Different types of missing data should have distinct codes. For example: “g2004: 9=system missing.”

- Universe information, i.e., from whom the data was actually collected. If this is a survey, documentation should indicate exactly who was asked the question. If a filter or skip pattern indicates that data on the variable were not obtained for all observations, that information should appear together with other documentation for that variable.

- Imputation and editing information. Documentation should identify data that have been estimated or extensively edited.

- Details on constructed and weight variables. Datasets often include variables constructed using other variables. Documentation should include “audit trails” for such variables, indicating exactly how they were constructed, what decisions were made about imputations, and the like. Ideally, documentation would include the exact programming statements used to construct such variables. Detailed information on the construction of weights should also be provided.

- Variable groupings. For large datasets, it is useful to categorize variables into conceptual groupings.

Resource: Creating a Codebook

See this document on codebooks: Stephenson, Libbie, 2015, “About_Codebooks.rev072015.pdf”, All About Codebooks, https://doi.org/10.7910/DVN/U5FWYL/KLY4KT, Harvard Dataverse, V1

Some statistical software can generate a codebook from the data file. For example,

- Stata https://www.stata.com/manuals/dcodebook.pdf

- R https://cran.r-project.org/web/packages/codebook/index.html

- SPSS (great resource from Kent State) https://libguides.library.kent.edu/spss/codebooks
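To show what these tools automate, the following Python sketch builds a minimal codebook entry for each column of a CSV file. Each entry has a label, valid and missing counts, and value frequencies. The function name `build_codebook`, the `labels` dictionary, and the default missing codes are assumptions made for illustration. The tool output still needs human-written question wording, universe, and construction notes.

```python
import csv
import io
from collections import Counter


def build_codebook(csv_text, labels, missing_codes=("", "NA")):
    """Summarize each column of a CSV file as a minimal codebook entry.

    `labels` maps variable names to human-readable labels; variables without
    one are flagged. `missing_codes` lists the strings treated as missing.
    """
    reader = csv.DictReader(io.StringIO(csv_text))
    rows = list(reader)
    codebook = {}
    for name in reader.fieldnames or []:
        values = [row[name] for row in rows]
        valid = [v for v in values if v not in missing_codes]
        codebook[name] = {
            "label": labels.get(name, "MISSING LABEL"),
            "n_valid": len(valid),
            "n_missing": len(values) - len(valid),
            "frequencies": dict(Counter(valid)),
        }
    return codebook


sample = "g2004,age\n1,34\n0,\n1,51\n"
book = build_codebook(sample, {"g2004": "Voted in the general elections of 2004"})
for variable, entry in book.items():
    print(variable, entry)
```

Here `age` is reported with the placeholder label "MISSING LABEL", which is exactly the kind of gap a curator would raise with the data authors.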

Other Contextual Documentation

To help determine if data are “independently understandable for informed reuse,” other documentation may be helpful. Such contextual documentation helps inform the scholarly record. Some examples include the following.

Survey data

- Interview schedules

- Interviewer and coder instructions (e.g., instructions for humans who are classifying open-ended survey responses, see Pew Research Center example).

- Screening forms

- Call-report forms

- Consent form language

- Other disclosure requirements; see, for example, the American Association for Public Opinion Research (AAPOR) disclosure standards

Experimental data

- Electronic copies of materials used to administer the intervention (treatment). For example, mailings, transcripts of robo-calls, summary of curriculum, TV ads, audio files (see ISPS example).

- Randomization procedure. The instructions should be presented in a way that, together with the design summary, conveys the protocol clearly enough that the design could be replicated by a reasonably skilled experimentalist.

- Informed Consent Statement.

- Pre-Analysis Plan. A document that formalizes and declares the design and analysis plan for the study. See EGAP’s 10 Things to Know About Pre-Analysis Plans.

Administrative data

- Data collection forms for transcribing information from records.

Using the knowledge accumulated in Lesson 2, let’s put this theory into practice by working with an existing dataset and its codebook. Digging into real data and codebooks is the best way to become familiar with the many possibilities. While there is no one-size-fits-all approach, curators can adopt better practices by anticipating what future users will need to know to reuse the data successfully.

Title

See data and codebook made available via OpenICPSR.


Seltzer, Andrew J. Union Bank of Australia, Data and Codebook. Ann Arbor, MI: Inter-university Consortium for Political and Social Research [distributor], 2020-02-28. https://doi.org/10.3886/E117971V1

Solution

solution

Key Points

Study Identification is critical to ensure re-users are able to use the correct reproducibility package, contact study authors, and locate files and communications related to the study.

Keep the instructions succinct.

Using relative paths in your code and a master file to execute the scripts helps simplify the instructions.

Information about the computing environment and the date the analysis was last run are crucial to rebuild the environment should the need arise.

The Data Availability Statement should be clear enough for researchers to access the same data used in the analysis and to apply the file names used in the code, so that the code can load the data properly.

A codebook or data dictionary is critical to the interpretation of data and output files.

Data Review

Overview

Teaching: 0 min

Exercises: 0 min

Questions

Why is data review important for reproducibility?

What curation activities are associated with data review?

Objectives

Understand why it is important to review deposited data

Recognize data review best practices

In this episode, we unpack the Data Quality Review Framework, focusing on Data Review. Other episodes in this lesson elaborate on File, Documentation, and Code Review.

Data Review in Practice

A data quality review also involves some processing – examining and enhancing – of the data. These actions require performing various checks on the data, which may be automated or manual procedures. It often requires opening the data file with the appropriate software.

The United Kingdom Data Archive (UKDA) provides a comprehensive list of things to check: ‘double-checking coding of observations or responses and out-of-range values, checking data completeness, adding variable and value labels where appropriate, verifying random samples of the digital data against the original data, double entry of data, statistical analyses such as frequencies, means, ranges or clustering to detect errors and anomalous values, correcting errors made during transcription’ (UKDA, n.d.).

In addition, “data need to be reviewed for risk of disclosure of research subjects’ identities, of sensitive data, and of private information… and potentially altered to address confidentiality or other concerns” (Peer et al., 2014).

Reviewing deposited data can seem abstract when thinking about it hypothetically. The exercises throughout this episode are tailored to further our understanding of data review by spending some time with example files. The exercises ask you to identify problems with the data and suggest resolutions. The Solution copies of the example data highlight issues and show ways of resolving them.

Variables and Values

Confirm that all variables in the data files contain variable and value labels. Look for ambiguous variable labels, and clarify the units of measurement for the variables. Clarify what the use of “blanks” means for this data.

Confirm that the variable and value labels are consistent across metadata and other associated files. For each variable, review the documents for metadata about that variable and ensure the metadata exists and is consistent. Confirm also that any recoded or transformed variables are properly described in metadata and other associated files.
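Part of this consistency check can be scripted. The Python sketch below assumes the data are a CSV file and that the codebook's reported frequencies have been typed into a dictionary. It reports columns without a variable name, variables the codebook does not describe, and values whose counts differ between the data and the codebook. The function name `compare_with_codebook` and the dictionary layout are hypothetical. The same comparison can be done with Stata's `tabulate` or R's `table()`.

```python
import csv
import io
from collections import Counter


def compare_with_codebook(csv_text, documented):
    """Compare a CSV file's columns and value counts with its codebook.

    `documented` maps each variable name to the frequency of each value as
    reported in the codebook, e.g. {"GENDER": {"female": 10, "male": 8}}.
    """
    reader = csv.reader(io.StringIO(csv_text))
    header = next(reader)
    rows = list(reader)
    problems = []
    for i, name in enumerate(header):
        if not name.strip():
            problems.append(f"column {i + 1} has no variable name")
            continue
        if name not in documented:
            problems.append(f"{name}: not described in the codebook")
            continue
        observed = Counter(row[i] for row in rows)
        expected = documented[name]
        # Any value whose count differs (including 0 vs. nonzero) is reported.
        for value in sorted(set(observed) | set(expected)):
            if observed.get(value, 0) != expected.get(value, 0):
                problems.append(
                    f"{name}={value!r}: {observed.get(value, 0)} in data, "
                    f"{expected.get(value, 0)} in codebook"
                )
    return problems


data = "GENDER\nfemale\nnonbin\nother\n"
documented = {"GENDER": {"female": 1, "nonbinary": 1, "other": 0}}
for problem in compare_with_codebook(data, documented):
    print(problem)
```

In this made-up example, the script flags the ‘nonbin’/‘nonbinary’ spelling mismatch and an ‘other’ count that the codebook reports as 0.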

Exercise: Variables and Values

Review the sample questionnaire, codebook, and data file.

- Do all the variables have labels?

- Are there discrepancies between variables or values in the data and other associated files?

Solution

Do all the variables have labels?

- One column of the .csv data has a missing label (should be: GENDER)

Are there discrepancies between variables or values in the data and other associated files?

- Gender variable: data has values ‘nonbin’, while codebook and questionnaire indicate the value was recorded as ‘nonbinary’

- Gender = ‘other’ occurs 3 times in the data, but codebook indicates its frequency is 0

Dataset Errors

Data must be examined for inconsistencies and errors.

Observation counts

Confirm that the number of observations in the data file matches the number of observations the study claims to have had, either in published articles or associated files such as the codebook or readme.
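As a minimal sketch, assuming the data are a CSV file with one header row, the check amounts to counting data rows and comparing them with the number reported in the documentation. The function name is hypothetical. In Stata, `count` gives the same figure, and in R, `nrow()` does.

```python
import csv
import io


def check_observation_count(csv_text, expected_n):
    """Compare the number of data rows (header excluded, blank lines skipped)
    with the count reported in the codebook, README, or article."""
    n_rows = sum(1 for _ in csv.DictReader(io.StringIO(csv_text)))
    if n_rows != expected_n:
        return f"data has {n_rows} rows, documentation reports {expected_n}"
    return None  # counts agree


print(check_observation_count("id\n1\n2\n", 3))
```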

Out of range or wild codes

Identify any out-of-range or wild codes (response codes that are not authorized for a particular question or variable).

Missing values and other errors

Identify any missing (undefined null) values or other potential errors in the data. Check for invalid values, or for metadata that incorrectly specifies the constraints for a variable. Be sure no data look double-entered, or incorrectly transcribed.

It can help here to compare a sample of the data to any original data files, including physical ones, if data has been copied over or transcribed. Review summary statistics to verify that they fall within the expected values for the variable.
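A first pass over missing values, possible double entry, and summary statistics can be scripted, as in the Python sketch below. It counts values matching a list of missing codes. These codes are an assumption, so use the codes the codebook defines. It also lists rows that appear more than once and computes n, minimum, maximum, and mean for the numeric variables you name. The function name `screen_data` is hypothetical. Comparing samples against original or physical records still has to be done by hand.

```python
import csv
import io
import statistics
from collections import Counter


def screen_data(csv_text, numeric_vars, missing_codes=("", "NA", ".")):
    """Count missing values, flag repeated rows, and summarize numeric columns.

    `numeric_vars` must contain only numbers or one of `missing_codes`.
    """
    reader = csv.DictReader(io.StringIO(csv_text))
    rows = list(reader)
    report = {"missing": {}, "duplicate_rows": [], "summary": {}}
    for name in reader.fieldnames:
        n_missing = sum(1 for row in rows if row[name].strip() in missing_codes)
        if n_missing:
            report["missing"][name] = n_missing
    # Identical rows may indicate double entry and deserve a closer look.
    counts = Counter(tuple(row.values()) for row in rows)
    report["duplicate_rows"] = [values for values, n in counts.items() if n > 1]
    # Summary statistics to compare against the expected values.
    for name in numeric_vars:
        values = [float(row[name]) for row in rows
                  if row[name].strip() not in missing_codes]
        if values:
            report["summary"][name] = {
                "n": len(values),
                "min": min(values),
                "max": max(values),
                "mean": statistics.mean(values),
            }
    return report


report = screen_data("id,score\n1,10\n2,\n2,\n3,30\n", ["score"])
print(report)
```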

Becoming familiar with common errors is a strategic step that can be integrated into curation workflows. Knowing which areas to concentrate on can offset some of the more laborious parts of the work, and practice builds the confidence that leads to greater efficiency. Let’s get more familiar with these errors in the next challenge.

Exercise: Dataset Errors

Review the sample study article, codebook, and data file.

- Are there any observation count discrepancies?

- Are all the data in bounds for each variable?

- Are there undefined null values or other errors?

Solution

Are there any observation count discrepancies?

- The data have 2810 rows of observations, while the codebook and published article indicate that observations were collected from 2812 participants.

Are all the data in bounds for each variable?

- Some of the ages listed are 0, 100, 200, and are clearly out of bounds (participants are in high school)

Are there undefined null values or other errors?

- The Form data field has text values and blank values, when the codebook indicates it should be numeric and only values 1 or 2

Personally-Identifiable Information (PII)

If a research compendium is being prepared for public access, and human subjects data is present, identify any variables that contain data that could identify study participants.

Two kinds of variables could endanger research participants’ confidentiality: direct identifiers and indirect identifiers.

Direct identifiers are variables that point explicitly to particular individuals or units, such as names, addresses, and identifying or linkable numbers (e.g., student IDs, Social Security numbers, driver’s license IDs). Any direct identifiers should be removed or masked prior to depositing.

Indirect identifiers are variables that can be used together or in conjunction with other information to identify individual respondents. Examples include detailed geographic information, exact job, office or organization titles, birthplaces, and exact event dates. Indirect identifiers may be re-coded in various ways, such as converting dates to time intervals, and geographic codes to broader levels of geography.
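The two recoding strategies mentioned above can be sketched in Python. The first converts an exact date into an interval from a reference date. The second maps a detailed geographic code to a broader region. The column names `interview_date` and `county_fips`, the reference date, and the two-entry lookup table are all hypothetical. A real recode would use a complete crosswalk agreed with the data authors.

```python
import datetime

# Hypothetical crosswalk from detailed county codes to broader regions.
COUNTY_TO_REGION = {"09009": "Northeast", "17031": "Midwest"}


def recode_indirect_identifiers(record, reference_date):
    """Return a copy of `record` with an exact date and fine geography coarsened."""
    coarse = dict(record)
    event = datetime.date.fromisoformat(coarse.pop("interview_date"))
    # Replace the exact date with an interval (days since a reference date).
    coarse["days_since_start"] = (event - reference_date).days
    # Replace the detailed county code with a broader region.
    coarse["region"] = COUNTY_TO_REGION.get(coarse.pop("county_fips"), "Other")
    return coarse


record = {"id": "7", "interview_date": "2020-03-15", "county_fips": "09009"}
print(recode_indirect_identifiers(record, datetime.date(2020, 3, 1)))
```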

Spotlight: Removing PII

Remove or anonymize any personally identifiable information in the data files.

Removing PII from a data file may be a manual process. Alternatively, it may involve using statistical software (e.g., Stata, R) to edit the data or write new code, or running a program or script that deletes or otherwise transforms the identifying variables and writes out a new, revised version of the data file. The resulting data file (as well as any new code file) is then added to the catalog record.
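Here is a minimal Python sketch of the scripted route. It removes every column whose name is on a list of direct identifiers and returns the revised CSV text, which can then be written to a new file. The original file stays unchanged. The identifier names in `DIRECT_IDENTIFIERS` are hypothetical and must be adapted to the variables actually found in the data. Columns are matched by name only, so identifiers hidden in free-text fields still need a manual check.

```python
import csv
import io

# Hypothetical direct-identifier column names (compared case-insensitively).
DIRECT_IDENTIFIERS = frozenset({"name", "address", "student_id"})


def remove_direct_identifiers(csv_text, identifiers=DIRECT_IDENTIFIERS):
    """Return a copy of the CSV text without any direct-identifier columns."""
    reader = csv.DictReader(io.StringIO(csv_text))
    kept = [c for c in reader.fieldnames if c.strip().lower() not in identifiers]
    out = io.StringIO()
    # extrasaction="ignore" silently drops the identifier fields from each row.
    writer = csv.DictWriter(out, fieldnames=kept, extrasaction="ignore",
                            lineterminator="\n")
    writer.writeheader()
    writer.writerows(reader)
    return out.getvalue()


print(remove_direct_identifiers("Name,Address,score\nAna,1 Main St,5\n"))
```

Keeping this script alongside the revised data file documents exactly which variables were removed.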

Data authors are sometimes too close to their data to easily spot PII when it is time to share the data widely. The following challenge helps us think about how the nuances of a variable can lead to PII, and about the workarounds curators can suggest so that the data can still be shared. Knowing these better practices, and advocating for them with data authors, allows data to be shared that otherwise might have been withheld had they not been de-identified.

Exercise: Anonymizing Data

Review the example data file: 2018-Kim-dataset_final.csv.

- Which information counts as personally identifiable information (PII)?

- How would you anonymize the PII in this file?

Solution

Which information counts as PII in the example file?

- Name, Address

How would you anonymize any personally identifiable information in the example data file?

- Name → redact this column

- Address → transform to City or Country only

Key Points

Data must be checked for missing or ambiguous variable and value labels.

Observation counts should be consistent across files.

Data values should be in range, and error-free.

Metadata and references to the data in associated files should be checked for consistency and accuracy.

PII should be identified and anonymized or removed.

Clear metadata about who created, owns, and stewards the data, and what the licensing terms are for reuse, should be created.

Code Review

Overview

Teaching: 0 min

Exercises: 0 min

Questions

Why is code review important for reproducibility?

What curation activities are associated with code review?

Objectives

Basic understanding of what is involved in code review

Providing examples of common code issues when attempting computational reproducibility

In this episode, we unpack the Data Quality Review Framework, focusing on Code Review. Please note that Episodes 2-4 (File, Documentation, and Data Review) in this lesson may include material familiar to many data curators. In this Episode 5 on Code Review, we introduce the additional curation activities that specifically involve code. The work done in Lesson 2 can be considered the pre-work necessary to continue the work in this Episode.

Lesson 3: Reproducibility Assessment is entirely dedicated to the practice of code review in support of reproducible research.

What is Code?

In the context of this lesson, code refers to the computer code necessary to perform data-related operations such as cleaning, transforming, or analyzing data. Relatedly, a script is a collection of code, usually pertaining to a particular step in the data analysis.

Code files commonly used in research include the following extensions: .do (Stata), .R and .Rmd (R), .ipynb (Jupyter notebooks), .jl (Julia), and .py (Python).

“Code in data-intensive research is generally written as a means to an end, the end being a scientific result from which researchers can draw conclusions [e.g., gain statistical insight into distributions or create models using mathematical equations]. This stands in stark contrast to the purpose of code developed by data engineers or computer scientists, which is generally written to optimize a mechanistic function for maximum efficiency.” Stoudt, S., Vásquez, V. N., & Martinez, C. C. (2021). Principles for data analysis workflows. PLoS Computational Biology, 17(3), e1008770. https://doi.org/10.1371/journal.pcbi.1008770

See the examples of scripts below.

Data generating, cleaning, or transformation:

//Labeling variables
label variable treatment "The manipulation group"
label define treatment1 1 "$4" 2 "$"
//Attach the value label treatment1 to the variable treatment
label values treatment treatment1
label variable pieces "How many pieces of pizza did you eat today?"
label define ideolab -3 "V. Lib." -2 "Lib." -1 "S. Lib." 0 "Middle" 1 "S. Cons." 2 "Cons." 3 "V. Cons." 4 "" 5 "DK/Can't place"

//Recoding variables: collapse region codes into four categories in a new variable
recode region (1/2=1 "Northeast") (3/4=2 "Midwest") (5/7=3 "South") (8/9=4 "West"), gen(region_4cat)

//Generating variables
generate gp100m = 100/mpg
label var gp100m "Gallons per 100 miles"

Data analysis:

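//Cross-tabulation with a Pearson chi-squared test
tab price foreign, chi2
//Linear regression of price on mpg, foreign, and weight
reg price mpg foreign weight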

Create tables and figures:

#########
#########TABLE 2: Cross tabulation of unfairness views and racial resentment
#########
library(xtable)  # provides xtable() for exporting LaTeX tables
# Percentages among white respondents (race_1 == 1) for two resentment measures
plotdata <- cbind(100*prop.table(table(survey$unfair_my[survey$race_1==1],survey$res_fav2[survey$race_1==1])),
                  100*prop.table(table(survey$unfair_my[survey$race_1==1],survey$res_dis2[survey$race_1==1])))
rownames(plotdata) <- c("Not Unfair to Own Race","Unfair to Own Race")
colnames(plotdata) <- c("Not Resentful","Resentful","Not Resentful","Resentful")
# Write the table to a LaTeX file
print(xtable(plotdata, digits=0,label="tableres", caption="Among white respondents, the incidence of unfairness perceptions and two common measures of racial resentment. See footnote \\ref{questionwording} for question wording."),
      floating = TRUE, file="table2.tex")

Saving or logging:

log using stata_exercise.log, replace

Why Review Code?

The term code review comes from software development, where it is a common and integral practice (see Google’s documentation on how to conduct a code review). Code review refers to a systematic approach to reviewing other programmers’ code for mistakes and other quality metrics. The review is conducted by a team member, who typically assesses whether requirements are met, how readable the code is, whether it conforms to a specific style, and so on. In this context, the goal of code review is to improve the overall health of the code base over time. Code review also spreads knowledge, so that no single developer is the “critical path,” and helps facilitate conversations about the code base.

In the context of curating for reproducibility, the primary reason to review code is that non-reproducibility is a threat to the scholarly record. In many labs, analysis scripts or software are written by a postdoc or graduate student. The code often grows over time and can become so cumbersome (e.g., over 30K lines of code in multiple files) and complicated that only the original author can make changes or add features. Research teams inevitably grow and change, so original authors cannot always be relied on for information or explanations when something goes wrong.

Another reason is methods transparency. Transparent sharing of data and results is the first fundamental step toward open research, and how the data are analyzed to reach those results should be just as transparent. Code not only performs operations on the data; it also serves as a log of analytical activities, allowing future users to identify and understand any unintentional errors.

In addition, considering that, in general, code is read more often than it’s written, we may want to review code because it reflects a particular or new way of thinking about a problem. Code communicates research decisions, methodologies, and results. It reveals a mindset or approach, and is more than just something that happened to work. Reviewing the code in detail not only helps ensure that it will be functional, but also that it can be applied to other data or to a common pattern encountered elsewhere.

Code Review in Practice

“Similar to data files, code files should also be subject to examination and potential enhancement to provide transparency and enable future informed reuse. A data quality review requires that code is executed and checked, that an assessment is made about the purpose of the code (e.g., recoding variables, manipulating or testing data, testing hypotheses, analysis), and about whether that goal is accomplished.” (Peer et al., 2014)
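Executing the code is the first concrete step of that review. The Python sketch below runs one script as a separate process and records the exit code, the elapsed time, and the captured output. The reviewer can then compare the output with the results the authors report. The command, for example a hypothetical `["Rscript", "master.R"]` or Stata run in batch mode, and the function name `run_script` are assumptions. Judging whether each script accomplishes its stated purpose remains a human task.

```python
import subprocess
import sys
import time


def run_script(command, timeout_s=3600):
    """Run one analysis script and record whether it finished cleanly.

    `command` is the full command line as a list of strings.
    Raises subprocess.TimeoutExpired if the run exceeds `timeout_s` seconds.
    """
    start = time.monotonic()
    result = subprocess.run(command, capture_output=True, text=True,
                            timeout=timeout_s)
    return {
        "command": " ".join(command),
        "exit_code": result.returncode,  # 0 usually means success
        "seconds": round(time.monotonic() - start, 1),
        "stdout": result.stdout,
        "stderr": result.stderr,
    }


if __name__ == "__main__":
    # Stand-in for a real analysis script, so the example runs anywhere.
    report = run_script([sys.executable, "-c", "print('hello from the analysis')"])
    print(report["exit_code"], report["stdout"].strip(), report["seconds"])
```

The recorded run time can also be reported in the README as the estimated time to complete processing.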

Discussion: Who Should Conduct a Code Review?

Before turning to the practice of code review, it might be a good time to pause and ask,

- Who is responsible for verifying reproducibility? And, more generally, who is responsible for the quality of research data and code deposited in repositories? Consider the pros and cons of the following options:

- Original author(s)

- Informal peer network (e.g., collaborator, friend in the department)

- Formal peer network (e.g., peer reviewers, third party verifiers)

- Archive and repository staff

- Other?

- Will peer code review discourage scientists from sharing code?

Lesson 3: Reproducibility Assessment of the Curating for Reproducibility Curriculum is entirely dedicated to the practice of code review in support of reproducible research.

Key Points

Difficulties with executing code can prevent computational reproducibility.

Researchers should write code with reproducibility in mind.

Curators reviewing the code can take steps to ensure that it is executable and documented to facilitate reproducibility.