Compendium Packaging

Overview

Teaching: 0 min

Exercises: 0 min

Questions

Why is it important to package research artifacts into a compendium?

What are best practices for packaging files into the research compendium?

How does the TIER Protocol help with compendium packaging?

Objectives

Explain the importance of organizing files in the research compendium

Apply the TIER Protocol to organize files within a research compendium

Research Compendium Packaging

When preparing research materials to submit to a trustworthy repository for long-term preservation and sharing, it is important to package the materials in a way that enables other researchers to easily understand the contents of each artifact contained in the compendium and their function in relationship to one another. This can be accomplished with logical file organization, standard file naming schemes, and file version control.

File Organization: The TIER Protocol

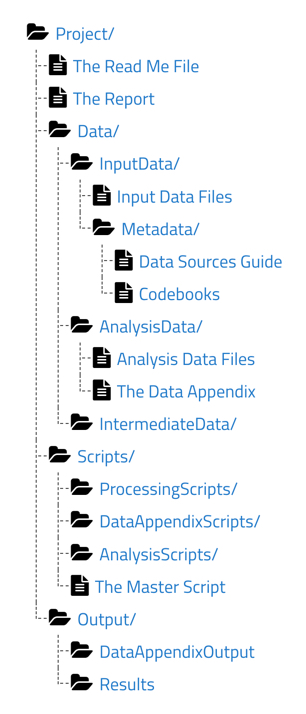

The TIER (Teaching Integrity in Empirical Research) Protocol offers a framework that specifies which files should be included in a research compendium, what those files should contain, and how those files should be organized in a hierarchical file folder structure. The TIER Protocol was designed to support four specific standards for computational reproducibility:

- Sufficiency. The research compendium should contain all materials necessary to computationally reproduce reported results.

- Soup-to-nuts. The research compendium should contain all materials necessary to re-trace the computational workflow from beginning (preparing data for analysis) to end.

- Portability. The research compendium should contain all materials necessary to allow for computational reproduction on any computer workstation.

- (Almost) one-click reproducibility. The research compendium should contain all materials necessary to generate results using a single master script.

As discussed in previous lessons in the Curating for Reproducibility Curriculum, the research compendium, at a minimum, should contain a readme, dataset, codebook, and script file. The file folder granularity of TIER Protocol 4.0 goes further to reflect the reality of typical computational research that often requires several data and script files to perform all of the steps in the analytical workflow, some of which may generate intermediate files that then become part of the analysis to produce outputs.

Within the root Project/ folder are the README file (see Episode 3: Documentation Review in Lesson 2: Curating for Reproducibility Workflows of the CuRe Curriculum), the Report file (final article/report), and three primary subfolders, each containing specific files as described below.

Data/

This folder should contain input data files containing the data obtained from a primary source or constructed from data collection/generation efforts, analysis data files containing the processed data used in the analysis, and any intermediate data files created during data processing and stored temporarily to be used in subsequent steps of the analysis.

In addition, each input data file should be accompanied by a codebook that defines the variables in the dataset and a data sources guide that provides a data citation, data availability/licensing information, and instructions on how to access the data from their original sources.

For each analysis data file, the TIER Protocol also requires a data appendix. Similar to a codebook, the data appendix defines each variable. However, the data appendix also provides information on the unit of observation, basic summary statistics, and variable distributions.

Scripts/

This folder should include processing scripts used to transform input data into analysis data files, analysis scripts used to generate results presented in the final report/article, and data appendix scripts used to produce results presented in the appendix of the final report/article.

To help achieve (almost) one-click reproducibility, the TIER Protocol also recommends a master (or main) script file (see Episode 3: Code Inspection in Lesson 3: Reproducibility Assessment of the CuRe Curriculum) that runs all of the other scripts in the proper order, requiring as little human intervention as possible beyond executing the master script, to produce the results presented in the report/article and the appendix.
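The master script idea can be sketched in Python. This is a minimal illustration, not part of the TIER Protocol itself: it assumes the processing, analysis, and data appendix scripts are Python files, and the script names in `PIPELINE` are hypothetical placeholders. Each step runs from the project root, and the run stops at the first failing script.

```python
import subprocess
import sys
from pathlib import Path

# Hypothetical script names, listed in the order they must run:
# processing scripts first, then the scripts that read their outputs.
PIPELINE = (
    "Scripts/01_process_input_data.py",
    "Scripts/02_run_analysis.py",
    "Scripts/03_data_appendix.py",
)


def run_pipeline(project_root: Path, scripts=PIPELINE) -> None:
    """Run each script in order from the project root; stop at the first failure."""
    # Resolve to an absolute path so script paths stay valid after changing cwd.
    root = Path(project_root).resolve()
    for script in scripts:
        print(f"Running {script} ...")
        # check=True raises CalledProcessError if a script exits with a
        # non-zero status, so later steps never run on incomplete outputs.
        subprocess.run([sys.executable, str(root / script)], cwd=root, check=True)
```

In practice, a `main.py` placed in the root Project/ folder could end with `run_pipeline(Path(__file__).resolve().parent)`. Whatever language a project uses, the design choice is the same: a single, fixed, documented order of calls that fails loudly rather than silently producing partial results.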

Output/

This folder should contain results files that include the figures, tables, and any other results presented in the final report/article, as well as data appendix output files that include the figures, tables, and any other outputs presented in the appendix of the final report/article.
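To make the folder hierarchy concrete, the sketch below creates an empty TIER-style skeleton with Python's standard `pathlib` module. The three top-level folders come from the description above; the subfolder names are hypothetical labels for the file categories described in each section, so check the TIER Protocol 4.0 documentation for the official names before adopting them.

```python
from pathlib import Path

# Top-level folders follow the TIER description above; the subfolder
# names are hypothetical labels for the file categories in each section.
TIER_LAYOUT = {
    "Data": ["InputData", "AnalysisData", "IntermediateData"],
    "Scripts": ["ProcessingScripts", "AnalysisScripts", "DataAppendixScripts"],
    "Output": ["Results", "DataAppendixOutput"],
}


def create_tier_skeleton(root: str) -> list[Path]:
    """Create the folder hierarchy under `root` and return the leaf folders."""
    project = Path(root)
    created = []
    for top, subfolders in TIER_LAYOUT.items():
        for sub in subfolders:
            folder = project / top / sub
            folder.mkdir(parents=True, exist_ok=True)  # safe to re-run
            created.append(folder)
    # Placeholder README in the root folder, to be filled in by the researcher
    readme = project / "README.txt"
    if not readme.exists():
        readme.write_text("Describe the compendium contents here.\n")
    return created
```

Calling `create_tier_skeleton("Project")` builds the empty structure; the Report file and the data, script, and output files are then added by hand.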

Learn more about the TIER Protocol here:

https://www.projecttier.org/

It is the rare researcher who routinely keeps their compendium files in a neat folder structure like the one suggested by the TIER Protocol. More often, files are delivered in a single folder with little information on what each file contains. The next challenge will give you a chance to transform a collection of files into an organized research compendium.

Exercise: TIER(s) of Joy

As this episode explained, organizing files in a logical manner supports reproducibility by making the purpose of each file and the relationships among them more evident. This is useful in curation for reproducibility workflows, which require an understanding of the analytical workflow in order to re-execute the analysis and confirm that results are reproducible.

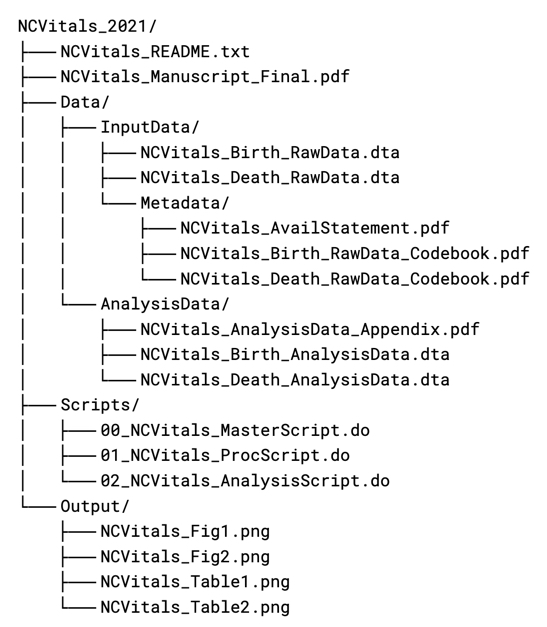

The file list below was extracted from the original README.txt included in a research compendium submitted for curation. Organize the study files in a logical folder structure as outlined by TIER Protocol 4.0.

Solution


File Naming

A good filename gives anyone who encounters the file an immediate sense of what it contains and its function among other related files. By establishing and consistently applying a standard file naming convention, researchers, collaborators, and future users of the research compendium can more easily locate, understand, and use the compendium files.

Filename elements

An effective file naming convention prescribes standardized filenames composed of descriptive elements that uniquely and concisely identify each file. A convention might require some of the following elements:

- short study title or study acronym

- study location

- sample

- content type

- file production/update date

- researcher initials

- file version number

Filename formatting

File naming conventions should also take into account the computing environment in which the files will be rendered and used. Some operating systems and software applications impose character limits on object names or treat certain characters in unexpected ways during certain operations. To avoid such problems, file naming conventions should also establish formatting standards for filenames and for specific elements within filenames, such as those below:

- Keep filenames as short as possible.

- Avoid the use of spaces and special characters.

- Use underscores or camel case to enhance human readability.

- Apply the ISO 8601 basic format (YYYYMMDD) for dates.

- Include leading zeros for sequential file numbering.
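A naming convention is easier to apply consistently when filenames are generated rather than typed. The sketch below assumes a hypothetical convention modeled on the version-control examples in this episode: descriptive elements joined by underscores, an ISO 8601 basic date, and a zero-padded version number. The function name `build_filename` is illustrative only.

```python
import re
from datetime import date

# Assumed rule: elements use letters and digits only (camel case allowed);
# the underscore is reserved as the separator between elements.
_ELEMENT = re.compile(r"[A-Za-z0-9]+")


def build_filename(elements: list[str], produced: date, version: int, ext: str) -> str:
    """Join filename elements, an ISO 8601 date, and a zero-padded version."""
    for element in elements:
        if not _ELEMENT.fullmatch(element):
            raise ValueError(f"invalid filename element: {element!r}")
    stamp = produced.strftime("%Y%m%d")  # ISO 8601 basic format, e.g. 20210213
    return "_".join([*elements, stamp, f"v{version:02d}"]) + "." + ext
```

For example, `build_filename(["NCVitals", "Birth", "AnalysisData"], date(2021, 2, 13), 1, "dta")` returns `"NCVitals_Birth_AnalysisData_20210213_v01.dta"`, while an element containing a space, such as `"Birth Data"`, raises a `ValueError`.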

The example below shows the application of a file naming convention to files contained in a research compendium.

Version Control

During the course of the research lifecycle, data are manipulated, scripts are updated, and documentation is revised. Recurrent changes made to files in the research compendium reflect the iterative nature of research and result in the accumulation of several versions of a given file. Version control not only keeps track of each version, making it easy to identify the precise active files that should be used to perform a task, but also makes it possible to revert to prior versions of a file should issues with the active file make it necessary. With regard to computational reproducibility, being able to identify the precise files used to execute the computational workflow and generate reported results is critical.

Version control can be as simple as appending standardized file version information to the file name, with the final version limited to read-only access.

NCVitals_Birth_AnalysisData_20210213_v01.dta

NCVitals_Birth_AnalysisData_20210216_v02.dta

NCVitals_Birth_AnalysisData_20210522_v03.dta

NCVitals_Birth_AnalysisData_20210629_v04FINAL.dta
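Because these names follow a consistent pattern, identifying the most recent version can be automated. The sketch below assumes the `<elements>_<YYYYMMDD>_v<NN>` pattern shown above (with an optional `FINAL` suffix) and parses the version number as an integer, so the result stays correct even if versions pass `v99`. The hypothetical helper `make_read_only` illustrates one way to limit the final version to read-only access by clearing write permission bits; on Windows, `os.chmod` can only toggle the read-only flag.

```python
import os
import re
import stat

# Assumed pattern: <elements>_<YYYYMMDD>_v<NN>[FINAL].<ext>
VERSIONED = re.compile(r"^.+_\d{8}_v(?P<num>\d+)(?:FINAL)?\.\w+$")


def latest_version(filenames: list[str]) -> str:
    """Return the filename with the highest parsed version number."""
    versioned = []
    for name in filenames:
        match = VERSIONED.match(name)
        if match:
            versioned.append((int(match["num"]), name))
    if not versioned:
        raise ValueError("no filenames match the versioning pattern")
    return max(versioned)[1]


def make_read_only(path: str) -> None:
    """Clear the write permission bits for owner, group, and others."""
    mode = os.stat(path).st_mode
    os.chmod(path, mode & ~(stat.S_IWUSR | stat.S_IWGRP | stat.S_IWOTH))
```

Applied to the four filenames above, `latest_version` returns `"NCVitals_Birth_AnalysisData_20210629_v04FINAL.dta"`.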

For research processes that require frequent file updates, the use of a file storage and management system that automates the assignment of version numbers to files as they are created and updated (e.g., Box, Open Science Framework) can reduce the effort of tracking versions of files.

The often non-linear nature of computational research can make version control of script files a difficult task. Version control systems such as Git, often used together with hosting platforms such as GitHub and Bitbucket, are becoming more popular among researchers as tools for tracking changes to script files as they write and revise their code. These tools automate the storage of file iterations while also capturing information about each iteration (when it was created, who created it, and what changes were made to it). Git is especially useful for collaborative projects because it also helps synchronize programming activities among collaborators, who would otherwise require careful coordination to ensure the correct files are being used.

Putting new skills into practice as you learn them helps you retain them and turn them into habits. Let’s try it here by practicing file naming.

Exercise: What’s in a (File) Name?

How would you rename the files below to make it easier for researchers to have a better sense of their contents and function, be able to track file versions, and ensure the filenames are machine-readable?

- parent-add health study wave 3_nov19.csv

- addhealth_child_w3_10-24-22.csv

- child-add health study-wave3 final.csv

Solution

- addhealth_w3_parent_20221119.csv

- addhealth_w3_child_20221024.csv

- addhealth_w3_child_final.csv
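A quick automated check can catch names that break the formatting rules listed earlier. The sketch below is a hypothetical helper, not a standard tool: it flags spaces, any character other than letters, digits, and underscores before the extension, and eight-digit tokens that are not valid YYYYMMDD dates. It cannot judge whether a name is descriptive; that still takes a human.

```python
import re
from datetime import datetime


def naming_problems(filename: str) -> list[str]:
    """Return convention violations; an empty list means the name passes."""
    stem = filename.rsplit(".", 1)[0]  # drop the extension, if any
    problems = []
    if " " in filename:
        problems.append("contains spaces")
    # Anything other than letters, digits, underscores (spaces reported above)
    if re.search(r"[^A-Za-z0-9_ ]", stem):
        problems.append("contains special characters")
    # Eight-digit elements are assumed to be dates and must parse as YYYYMMDD
    for token in stem.split("_"):
        if re.fullmatch(r"\d{8}", token):
            try:
                datetime.strptime(token, "%Y%m%d")
            except ValueError:
                problems.append(f"{token} is not a valid YYYYMMDD date")
    return problems
```

Applied to the exercise files, every original name is flagged (for example, `naming_problems("addhealth_child_w3_10-24-22.csv")` returns `["contains special characters"]`), while all three renamed files return an empty list.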

Key Points

It is important to package research artifacts into a research compendium that uses a logical file folder structure and standard file naming conventions to enable others to make sense of the files and the functional relationships among them.

The TIER Protocol is a useful framework for organizing compendium files that supports four essential computational reproducibility standards: sufficiency, soup-to-nuts, portability, and (almost) one-click reproducibility.

Research is a non-linear process that often requires frequent file revisions. Version control is critical to ensure that the files included in the compendium are the precise ones that can be used to reproduce reported results.

Terms of Use

Overview

Teaching: 0 min

Exercises: 0 min

Questions

Why is it important to define acceptable uses of the research compendium?

What is the best way to communicate acceptable uses of the research compendium?

How do I determine which type of license to apply to my compendium materials?

Objectives

Understand the importance of articulating acceptable or conditional uses of the research compendium using a license.

Be able to select an appropriate license for a particular research artifact based on its type and specified acceptable uses.

Terms of Use

Ideally, researchers would make their research compendium maximally available by placing the materials into the public domain to allow for unrestricted access and reuse. However, there are various justifiable reasons, legal or professional, why this may not be the best option. Datasets that contain sensitive information about human subjects or that are bound by licensing restrictions due to their proprietary nature cannot be shared publicly. A researcher may also want to receive formal credit or attribution from others who use the materials they created.

Whether a researcher decides to place the research compendium in the public domain or place restrictions and/or conditions on access or use of the research compendium, it is important to articulate for what purpose the materials may be used along with any obligations that come with using the materials.

Licenses

For researchers who have full ownership of their materials, and whose materials do not present privacy concerns, licenses can be a useful tool for sharing materials without requiring others to request formal permission to use them.

A license is a formal document that defines acceptable uses of a work by granting permission and setting conditions. Whereas copyright (as it is defined and enforced by U.S. copyright law) can act as a legal roadblock to reproducibility by conferring exclusive use rights to the creator of research materials, licenses remove this roadblock while still providing the creator a legal framework for enforcing specified acceptable uses and/or conditions for use of the materials.

Spotlight: Licensing “Facts”?

When it comes to applying licenses to research data, questions have been raised about whether U.S. copyright law allows it. According to 37 CFR § 202.1, certain materials are not subject to copyright, including “works consisting entirely of information that is common property containing no original authorship, such as, for example: Standard calendars, height and weight charts, tape measures and rulers, schedules of sporting events, and lists or tables taken from public documents or other common sources.” In other words, facts, which are often how data are described, are not copyrightable. For copyright law to apply, a work must be an authored work that is both original and sufficiently creative.

It has been successfully argued that research data can meet this standard of copyrightability when originality and creativity are expressed in the author’s selection and arrangement of the data within a database (see Feist Publications, Inc. v. Rural Telephone Service Co., Inc., 499 U.S. 340 (1991), which held that a compilation of facts is protected only to the extent that its selection or arrangement is original). Therefore, while the facts themselves are not subject to copyright, licenses can be applied to structured databases, i.e., datasets, that contain facts.

Read more about the nuances of licensing research data here:

Stodden, V. (2009). Enabling reproducible research: Open licensing for scientific innovation. International Journal of Communications Law and Policy, 13, 22–47. Retrieved from https://ssrn.com/abstract=1362040

Carroll, M. W. (2015). Sharing research data and intellectual property law: A primer. PLOS Biology, 13(8), e1002235. https://doi.org/10.1371/journal.pbio.1002235

Creative Commons. (2019, October 23). Data. Creative Commons Wiki. https://wiki.creativecommons.org/wiki/Data

Selecting a License

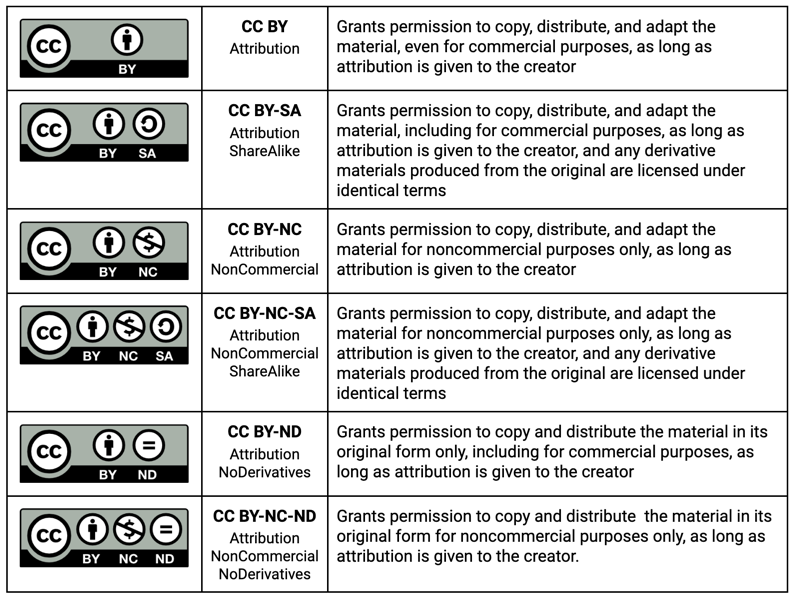

Creative Commons licenses have become widely recognized for their ease of use and understandability. Creative Commons was founded in 2001 as a non-profit organization helping creators make their works publicly available for use under certain conditions and without the encumbrances of copyright.

Creative Commons licenses offer many advantages because they allow creators to select from a range of licenses with different degrees of permissiveness, they offer standardized machine-readable legal text along with easy-to-understand summaries, and they are enforceable worldwide. Below are the license options offered by Creative Commons (version 4.0 International).

For researchers who may want to place their materials into the public domain without any restrictions or conditions of use, Creative Commons offers the CC0 Public Domain Dedication.

By applying CC0 to their materials, the researcher waives their copyright and related rights to the extent permitted by law, allowing others to distribute, remix, adapt, and build upon the materials for any purpose and without conditions.

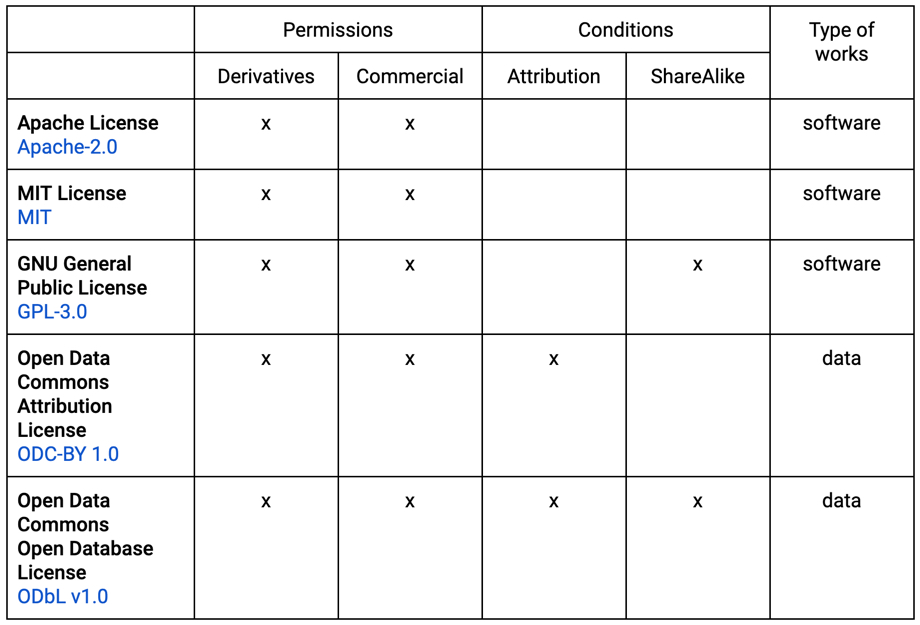

Besides Creative Commons, there are numerous licensing options for researchers to choose from, each allowing declaration of certain use conditions that may or may not be relevant to a particular type of material. The table below presents a list of other widely used licenses, the permissions and conditions the licenses define, and the types of works to which the licenses are commonly applied.

As with previous episode challenges, putting abstract concepts into action and discussing the reasons behind the choices can strengthen our understanding and confidence in applying those concepts. The next challenge will have you drawing from what you learned about licenses to select an appropriate license for materials included in a research compendium.

Exercise: Pick a License

Compendium description and context.

For each research compendium object listed below, use the Public License Selector tool (http://ufal.github.io/public-license-selector/) or other available resource to select the most appropriate license for the object in the compendium given the type of object and applicable ethical/legal considerations. How would you explain to a researcher why the selected license is appropriate for the given object?

- dataset

- code

- software package

Solution

dataset

code

software

Discussion: Principles of Scientific Licensing: The Reproducible Research Standard

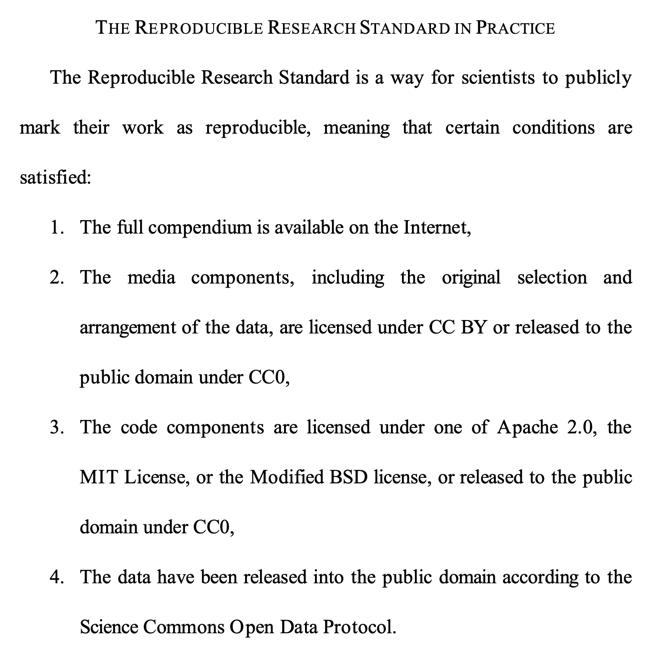

Considering the effect licensing can have on establishing scientific norms for sharing research outputs, Victoria Stodden (2009) proposed the Reproducible Research Standard (RRS) as a structure for ensuring that scientific works are made publicly available under licensing terms that promote openness required for reproducible research:

The RRS proposes that the research compendium be made available to the public under licensing terms that give others permission to copy and use the compendium. Doing so follows the Principle of Scientific Licensing, which states that “Legal encumbrances to the dissemination, sharing, use, and re-use of scientific compendia should be minimized, and require strong and compelling rationale before application” (p. 28).

Thinking about the RRS and the Principle of Scientific Licensing, discuss answers to the following questions:

- Why might a researcher insist that they can/will not implement the RRS for their research compendium?

- What response might you give to that researcher to encourage them to meet the conditions of the RRS?

- What are some “strong and compelling rationales” for applying legal encumbrances to sharing of a compendium?

Stodden, V. (2009). Enabling reproducible research: Open licensing for scientific innovation. International Journal of Communications Law and Policy, 13, 22–47. Retrieved from https://ssrn.com/abstract=1362040

Discussion Points

There are several possible reasons a researcher may insist that they can/will not implement the RRS for their research compendium. Here are some examples:

- The researcher does not hold the copyright to their materials. For example, the data used in their analysis may have been purchased from a commercial data producer.

- The researcher is a champion of Open Science and does not want to encumber their materials with a license. They want anyone who wishes to access and reuse the materials to be able to do so without any restrictions.

- The researcher has plans to conduct follow-up studies using their code and data. The researcher fears that if anyone can access the materials, their research will be scooped before they have a chance to publish their findings.

Responses to the above examples may include the following:

- It is possible that the data producer allows redistribution of at least parts of the data used in the analysis. If not, other parts of the compendium, such as the code used to generate the analysis dataset from the original data, can be licensed. Be sure to include detailed information on how others can access the data from the original producer.

- Licenses do not have to be restrictive at all. In fact, licenses give others explicit permission to use the materials without restrictions if so desired. By assigning a permissive license to the materials or placing the materials in the public domain under CC0, you are supporting Open Science by signaling to others that the materials are being offered for maximum reuse.

- Providing access to your research compendium affords the community the opportunity to examine the evidence underlying research findings, which is critical to reproducibility. When you provide access, you can also apply an attribution license, which makes it a requirement that anyone who uses your compendium must give you credit as the creator.

Two “strong and compelling rationales” for assigning a restrictive license to data may be that:

- The materials were used under an existing license that required users to apply the same license restrictions as a condition for using the materials (i.e., ShareAlike license).

- The data contain personally identifiable or protected health information that cannot be publicly released, and so licensing is not an option. In this case, the researcher may require users to sign a data use agreement that specifies requirements for mitigating disclosure risks and adhering to laws and regulations governing the protection of human subjects.

Key Points

Placing scientific materials into the public domain to maximize the potential for scientific reproducibility is ideal; however, there may be legal, ethical, and/or professional reasons that unrestricted sharing of the materials is not appropriate.

Licenses remove the impediment of strict copyright laws while still providing a legal framework for enforcing conditions for use of research artifacts.

While there are many different licenses available, it is important to select the license that is most appropriate for the particular artifact based on its type and the conditions under which the artifact can be used.

Trustworthy Repositories

Overview

Teaching: 0 min

Exercises: 0 min

Questions

What is a “trustworthy” repository?

What are the broad types of repositories?

How can you locate a trustworthy repository for your data?

What are things to consider as you decide on a repository?

Objectives

Identify the elements of a trustworthy repository

Navigate resources for finding trustworthy repositories across various disciplines

Learn the differences between generalist, disciplinary, and institutional repositories

Assess if a trusted repository is right for your data

What is a “Trustworthy” Repository?

As you have learned so far in this curriculum, the data curation process entails many steps that support the long-term sustainability and reuse of your research data. In this episode, you will learn why storing the compendium package in an appropriate location is a crucial step in ensuring that other researchers can find your data and research outputs and reuse them in the future. With a steadily increasing number of data repositories available to researchers, it can be difficult to know which repository is right for your research data and will keep your data safeguarded and preserved. Lin et al. (2020) describe a set of principles for digital repositories that serve this function, called the TRUST principles:

T - Transparency

R - Responsibility

U - User Focus

S - Sustainability

T - Technology

If a digital repository enacts these principles, you can reasonably consider it a trustworthy repository for your data. A helpful framework for assessing whether a digital repository exemplifies the TRUST principles is the CoreTrustSeal certification, which offers a catalogue of requirements that a trustworthy data repository should meet. This catalogue includes 16 requirements; a repository that is assessed and found to meet them is awarded the CoreTrustSeal certification. The “Things to Consider When Choosing a Repository” section below details how to assess the trustworthiness of a repository based on the CoreTrustSeal requirements.

Spotlight: Data Sharing Platforms - Are They Trustworthy Repositories?

There are many platforms available to researchers for storing and sharing their data, such as GitHub, Open Science Framework, and ArcGIS Online. However, can these platforms be considered trustworthy repositories? Information scientists and librarians often do not place these kinds of data sharing platforms in the same categories as other trustworthy repositories, despite their heavy usage as research compendium storage products. Data curators may need to discuss with researchers why a trustworthy repository is a better choice for the long-term preservation of their research compendium, based on the following reasons:

While these platforms may have high visibility in some research areas (such as geospatial scientists sharing data in ArcGIS Online), they often do not adhere to the CoreTrustSeal requirements and the TRUST principles. This means that the data may not have the same assurances for long-term preservation, governance, and transparency as a trustworthy repository.

Trustworthy repositories have dedicated personnel to support data depositors, which may not be the case with these kinds of data sharing platforms. Therefore, researchers may find themselves having to navigate data deposit issues without the advice and guidance of a data curator.

While platforms such as GitHub, Open Science Framework, and ArcGIS Online are useful project management and collaboration platforms for the research process, researchers should strive to store their research compendia in trusted repositories that fit the TRUST principles and CoreTrustSeal requirements.

Finding a Repository

Now, you will learn more about the different types of repositories available to researchers and how to assess their trustworthiness. As funding agencies and publishing outlets continue to push for more data sharing in academic research, the number of repositories available for these data has also grown. Choosing a trustworthy repository is important for safeguarding the long-term sustainability and reuse potential of the data. Beyond trustworthiness, some categories of repositories may be more suitable for the particular type of research data you are working with and may give your shared data more exposure and reuse. The Digital Curation Centre offers further resources and discussion on the benefits and considerations of each of these kinds of repositories.

Disciplinary and Subject-Focused Repositories

Disciplinary and subject-focused repositories cater to data and research outputs from specific areas of study and focus, such as political science, mechanical engineering, Indigenous data, and social sciences. Examples of disciplinary repositories include:

- National Center for Biotechnology Information (NCBI), which provides access to research outputs concerning biomedical and genomic information

- Database of Religious History, a repository containing quantitative and qualitative data pertaining to religious cultural history

- Center for International Earth Science Information Network, which provides access to data concerning human-environment interactions across the world.

- Mukurtu, a platform which empowers Indigenous communities to manage, narrate, and share their digital heritage

- Inter-university Consortium for Political and Social Research (ICPSR), which maintains and provides access to an extensive archive of social science data for research and instruction purposes

Many disciplinary repositories can be found on re3data, a registry of research data repositories that allows users to search by research data subject. Note that inclusion in re3data.org does not mean a repository is automatically trustworthy; researchers must still evaluate it for trustworthiness.

Generalist Repositories

Generalist repositories store and provide access to a wide range of research data types and do not restrict content types by discipline. These repositories are particularly useful for researchers whose discipline does not have a repository dedicated to their area of study. Examples of curated generalist repositories include:

While many generalist repositories can be considered trustworthy repositories, researchers should still plan to evaluate each potential repository they are considering for their data.

Institutional Repositories

These repositories are associated with a particular institution, such as a university/college, research institute, or national laboratory, and are generally used to store and showcase the outputs of researchers within that institution (Callicott et al. 2016). These repositories generally accept research materials from all disciplines present at an institution, and thus can be considered generalist repositories as opposed to disciplinary repositories focusing on a specific area of research. Some schools have a single repository for all research products (such as Temple University’s TUScholarShare) while others may have separate repositories for data and other scholarly products (pre-prints, articles, Electronic Theses and Dissertations, etc.). Examples of institutional repositories include:

- KiltHub, the official institutional repository for Carnegie Mellon University, managed through the University Libraries

- Oxford University Research Archive, the institutional repository for researchers at the University of Oxford

- Stanford Digital Repository, managing scholarly outputs from researchers at Stanford University

Most institutional repositories have a dedicated support staff and a mission for preserving scholarly information, and often constitute a trustworthy digital repository. However, as is the case with disciplinary and generalist repositories, researchers should plan time in their research workflow to evaluate their repository of choice for trustworthiness.

Things to Consider When Choosing a Repository

Now that you have learned about different categories of repositories, what are some additional tips for choosing the right repository for your data? Researchers can look for the CoreTrustSeal logo when visiting the website of a potential repository they might use for storing and sharing their compendium package.

However, your repository of interest may not have the official CoreTrustSeal certification. This does not necessarily mean that the repository cannot be considered trustworthy! You can still use the 16 requirements of the CoreTrustSeal certification to review your potential repository for trustworthiness and adherence to the TRUST principles. The CoreTrustSeal certification is an example of a standard used to signal that a repository meets the requirements of the TRUST principles, but due to the extensive certification process required, some repositories may not (yet) have this certification. Therefore, reviewing a repository that does not have the CoreTrustSeal certification for its adherence to the TRUST principles is incredibly important.

Spotlight: Repository Checklist

When assessing a repository for trustworthiness and fit for your data, look for the CoreTrustSeal certification, or in the absence of this certification, consider the following questions based on the 16 CoreTrustSeal requirements. In the column with the header “TRUST Principle,” list the principle(s) that correspond to the goals of each requirement.

| Question to Consider from CoreTrustSeal Requirements | TRUST Principle |
|---|---|
| Look for the mission/scope of the repository. Does the mission/scope discuss providing access to and preserving data? | |
| What are the licenses covering data access and use in the repository? Does the repository monitor compliance of data access and use in the repository? | |
| Does the repository have a continuity plan to ensure ongoing access to and preservation of the items within? | |
| Does the repository ensure that data are created, curated, accessed, and used in compliance with disciplinary and ethical norms? | |
| Does the repository have adequate funding, staff, and governance for carrying out the mission of the repository? | |
| Does the repository have access to expert guidance and feedback beyond the repository staff which can be applied to deposits in the repository? | |
| Does the repository guarantee the integrity and authenticity of the data? | |
| Does the repository have an appraisal process to determine if the data and metadata meet certain criteria levels for deposits? | |
| Does the repository have documented procedures and processes for managing archival copies of the deposits? | |
| Does the repository assume responsibility for long-term preservation? | |
| Does the repository have appropriate expertise to address technical data and metadata quality? | |
| Does archiving take place according to defined workflows from ingest to dissemination? | |
| Does the repository enable users to discover the data and refer to them in a persistent way through proper citation? | |
| Does the repository enable reuse of the data over time, ensuring that appropriate metadata are available to support reuse? | |
| Does the repository function on well-supported operating systems and core infrastructural software? | |
| Does the repository have security functions which provide for the protection of the platform and its data, products, services, and users? | |

It is important to acknowledge that not every repository is a good fit for all data. Sometimes recommending a repository outside of your own institution is a better service than accepting something that is not a good fit. Take some time to explore a specific dataset and the repositories that would be a good fit for it. This hypothetical exercise will help you look beyond your own repository biases.

Exercise: Locate a Trustworthy Repository for a Dataset

Dataset: Investing in Education in Europe: Attitudes, Politics and Policies (INVEDUC)

Now, let’s test out your knowledge so far from this episode by locating a trustworthy repository for the above dataset. Imagine you are the creator of this dataset, and you are looking for a repository to store this data to preserve and share it with others. Evaluate the characteristics (discipline, data type, etc.) of this dataset and use re3data.org (https://www.re3data.org/) to identify three possible trustworthy repositories that are well suited to the dataset.

Solution

In a group discussion, explain how each of your three candidate repositories demonstrates trustworthiness and fit for this sample dataset. How would you decide which repository to choose?

If you are having trouble finding appropriate repositories, consider these potential candidate repositories:

Discussion: Assessing your Repository using CoreTrustSeal Requirements

Assess one of the repositories you identified in the previous exercise on its compliance with the 16 CoreTrustSeal requirements using the repository checklist. Discuss the pros and cons of using this repository based on its compliance with the requirements: would you and your research team still consider using this repository? Are there any special considerations for what you might include in your research compendium, such as additional necessary documentation?

Key Points

The CoreTrustSeal certification is one of the benchmarks that denotes trustworthy repositories where researchers can safely store and share their compendium packages.

Researchers can choose disciplinary, generalist, or institutional repositories to store their compendium packages.

If your repository of choice does not have the CoreTrustSeal certification, that does not necessarily mean it is untrustworthy. You can still evaluate the trustworthiness of a repository through the CoreTrustSeal’s 16 requirements.

Special Cases

Overview

Teaching: 0 min

Exercises: 0 min

Questions

What are some special cases to consider when publishing data and compendium packages?

What are examples of restricted and proprietary materials in compendium packages?

What are examples of complex computing research outputs that may be included in compendium packages?

How can researchers balance reproducibility goals with proper handling of restricted and proprietary materials in compendium packages?

Objectives

Identify examples of restricted and proprietary materials that may be present in compendium packages

Learn how to balance reproducibility goals with special cases of data restrictions/usage

Create proper documentation for restricted and proprietary materials in compendium packages

Special Cases in Research Compendium Sharing

In Lesson 2: Episode 3, you learned about writing appropriate documentation for your research compendium (such as README files) to aid in its reuse, based on the characteristics of your data and code. When we are working with data that we have collected ourselves and the data has no ethical or legal restrictions that prevent us from sharing it, using the guidelines listed in this curriculum to create the final compendium package and its documentation can be relatively straightforward. However, many researchers may find themselves in a situation where their research materials pose additional considerations for the sharing process, which may influence how they arrange and prepare their research compendium for sharing. These situations may include:

- Working with restricted data that poses a high risk of harm to an individual, group, geographical location, entity, etc. if released publicly.

- Using data that is owned by a company or is otherwise not created or owned by the researcher.

- Attempting to share complex research outputs such as software, which may require additional documentation.

In this episode, we will discuss restricted materials, proprietary materials, and complex computing outputs that may be included in compendium packages, and you will learn what additional considerations may need to be made when depositing these materials into a trustworthy repository.

Restricted Materials

In the context of this curriculum, we can consider restricted materials to constitute data, software, and any other research ephemera that meet the definition of “restricted.” The definition of “restricted” may vary across organizations and contexts. For example, the Atomic Energy Act of 1954 defines restricted data as all information pertaining to the design, manufacture, or use of atomic weapons, the production of special nuclear material, or the use of special nuclear material in the production of energy, while the National Institute on Aging (NIA) (https://www.nia.nih.gov/research/dbsr/access-restricted-data) defines restricted data as “data that cannot be released directly to the public research community due to possible risk(s) to study participants as well as the confidentiality promised to them.” Researchers should take special care to understand what constitutes restricted data in their research context. What constitutes a restricted material may be influenced by:

- Your organizational affiliation and where your organization is located

- Who your collaborators (if any) are

- Who is funding your research

Proprietary Materials

Proprietary materials (such as data, software, etc.) are those which may be used in the research process to achieve a certain result, but are not owned by the researcher and thus generally cannot be shared openly in a compendium package. This may include data that is purchased or loaned from a company, software code developed by a private company, or other research tools whose use the researcher must specifically request for their project.

Complex Computing

Sharing a research compendium that contains complex computing materials such as software built by the researcher requires additional considerations for curating the package for reproducibility.

- Does the repository where you are storing the research compendium have sufficient infrastructure for storing/providing access to your software?

- Does your documentation adequately explain how reusers can install and use the software?

- Does your documentation outline a clear plan about how reusers may be made aware of any updates made to the software?

Spotlight: Know Your (Repository’s) Limits

Some repositories may not have the technical capability to host data and code whose access must be restricted rather than made publicly available without limitations. As you apply what you learned in Lesson 4: Episode 3 to choose appropriate repositories, consider whether a repository can host your restricted materials, proprietary materials, and complex computing products in a manner that balances reuse with their special considerations.

Practice makes better. The more you apply the practices presented in these episodes, the more ingrained and natural they will become. Here is another opportunity to put into practice what you have learned about READMEs, their functionality, and their importance.

Discussion: Balancing Reproducibility with Proper Data Sharing Procedures

As you learned in Lesson 1 from the definitions of empirical, statistical, and computational reproducibility, the factors needed to achieve reproducibility vary depending on the research context. In each of the scenarios below, consider how the researcher might balance their goals in making their work reproducible while adhering to proper procedures around restricted materials.

Scenario 1: Restricted Data with National Security Considerations

You work at a National Laboratory and are finishing up a research project which was dual-funded by the National Science Foundation (NSF) and a federal government agency. As a part of your NSF funding, you are required to share your research data from the project, but the federal agency which co-funded the project stipulates that the data has national security considerations and cannot be shared publicly. What do you do? Are there ways you can still share some of your research outputs to encourage reproducibility while satisfying the requirements of both organizations?

Scenario 2: Data Purchased from a Company

You have just received a grant from a funding agency to study the television viewing habits of teenagers in your country, using a dataset you purchased from a media company that tracks television viewing patterns for various media networks. A year later, you have successfully completed your project and are getting ready to put together your final research outputs and close out the grant. You see that your data has to be shared in order to satisfy the grant’s requirements, but you are not sure whether you can share the data that you purchased from a company. What questions do you need to ask in this situation? What are some solutions to this problem, or possible compromises?

Scenario 3: Sharing Lab-Built Software

You are a graduate researcher in the McMurray Lab, where you have been working on developing software that collects footage from eSports tournaments and prepares machine learning training data from the footage. The PI of the lab has asked you to develop the compendium package to share the software and associated documentation to satisfy mandates from the funder of the project. The software requires a very specific computing environment to function, and you are concerned about whether other people will be able to use the software properly after downloading it from the repository. What should you consider as you prepare the compendium package?

Solution

While there are no discrete correct answers for each of these cases, there are a few possible considerations to explore for each of these scenarios.

Scenario 1: Is it possible to share a metadata-only description of your research data in a repository, and in the accompanying documentation include information on how the full dataset can be accessed from the federal agency? In this case, useful information about the data has still been shared with potential reusers, and they have been given guidance on the additional steps they need to take to access the full dataset since it cannot be fully publicly shared.

Scenario 2: In the same vein as Scenario 1, will the company allow you to share a metadata-only description of the purchased data in a repository, and in the accompanying documentation include information on how the full dataset can be purchased directly from the company? If this is not allowable, can the company offer any compromises on how their intellectual property can be protected while also allowing you to satisfy your data sharing requirements? Who might be possible representatives of the company who can help answer these questions? The company’s legal department may be helpful in exploring this.

Scenario 3: Since the software requires an extremely specific computing environment to function, make sure your README describes in detail what the reuser needs to do to create this computing environment, and list contact information for the member(s) of the research team to reach in case the software malfunctions or becomes obsolete. While one goal of data curation is to reduce how often data reusers need to contact the original researchers, in cases involving complex computing such contact may be unavoidable.

Exercise: Create a README for a Compendium Package with Special Cases of Data and Code

In Lesson 2: Episode 3, you learned about the specific elements needed in a README file. We will now ask you to apply this information towards creating a README file for a research compendium containing special cases of data and code. You will not be creating each section of the README, but rather discussing what major key areas need to be included in the document based on the scenario below.

Scenario: You and your lab have created software that can be used to analyze biological data from lobster populations to identify the geographic extent of overfished areas in the ocean. This software has the potential for wide-reaching impacts on policies around the commercial fishing industries and sustainability initiatives in coastal areas. Therefore, you and the other researchers want to share this software publicly to support its widespread reuse. However, you have concerns about sharing the software as it requires an extremely specific computing environment, and you are unsure if other researchers will be able to duplicate this computing environment without the specialized equipment used in your lab to run the software. At your weekly lab meeting, you and the team discuss what needs to be included in the README accompanying the software in order to support the reuse of the software.

The following questions are examples of what to consider when creating a README in this case of sharing complex computing products:

- How will you clearly define the specific computing environment needed to run the software? Are there any specific

- Will you include your contact information to allow researchers to get in touch if they have trouble running the software?

- Do you plan to do regular updates of the software to make sure all dependencies and code continue to work? If so, how will you reflect this information in the README to ensure that researchers know how to access the latest version of the software?

Solution

Discuss how you and the team will design the README for this complex computing product. What additional information will need to be included in the README given the complexity of the software? While there are no discrete correct answers for this challenge, your discussion may include the following:

- How the complex computing environment (operating system and version number, other software dependencies needed, etc.) will be described in the README

- Any sections you will add/omit to the README to support the effective reuse of the software

- Any explanations/descriptions you will include in the README that give explicit instructions on installing and using the software

Key Points

When putting together compendium packages, researchers should be aware of any restricted, proprietary, or complex computing materials they are working with.

Restricted materials cannot be fully released to the public due to the high risk they can pose.

Proprietary materials are those which are not owned by the researcher, and generally cannot be shared publicly.

Complex computing materials such as software may require additional considerations when included in compendium packages, such as documentation outlining its development and use.

When working with any of these special cases, researchers should allow for extra time in their process of compiling their compendium packages to ensure they are balancing reproducibility goals with proper precautions.